Look, if you’re running a business in the UK or Germany and dipping your toes into AI—whether it’s chatbots for customer service, predictive analytics for hiring, or something more advanced like facial recognition—you can’t ignore the EU AI Act 2026. It’s basically the world’s first big swing at regulating AI, and it’s hitting full stride by August 2026, when most of the heavy rules kick in. Mess this up, and you’re looking at fines that could climb to €35 million or 7% of your global revenue, whichever stings more. I’ve seen companies scramble last-minute with stuff like GDPR, and trust me, it’s not pretty. This guide breaks it down simply, with real tips to keep you compliant without turning your operation upside down.

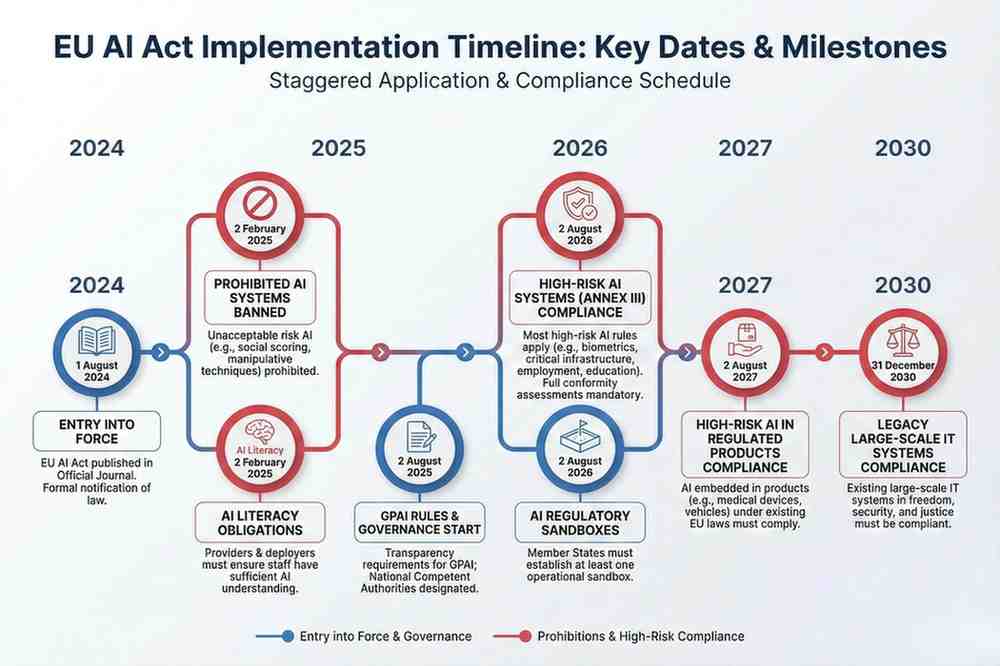

The Act isn’t just some far-off Brussels bureaucracy; it’s already rolling out in phases, starting with bans on the really risky stuff back in February 2025. By August 2026, high-risk AI systems, like those used in hiring or credit scoring, will need full compliance. For German firms, this is straight-up law: it’s an EU regulation, so it applies directly in Germany. UK businesses? You’re out post-Brexit, but if your AI touches EU markets or users, you’re still on the hook. Think about it: a London-based SaaS company selling to Berlin clients could get nailed if their tool isn’t up to snuff.

What Is the EU AI Act 2026 and Why Should You Care?

At its core, the EU AI Act 2026 sorts AI into risk buckets: unacceptable (banned outright), high-risk (strict rules), limited-risk (basic transparency), and minimal-risk (mostly hands-off). It’s all about protecting people’s rights while letting innovation roll. Prohibited stuff includes social scoring (by governments and companies alike) and AI that manipulates behavior in sneaky ways; think subliminal ads on steroids. High-risk covers AI in education, employment, or critical infrastructure, where screw-ups could hurt people.

Fines are no joke. Breaking the bans? Up to €35M or 7% of global annual turnover, whichever is higher. Other slip-ups, like bad data handling in high-risk systems, can hit €15M or 3%, and feeding regulators incorrect or misleading information can cost €7.5M or 1%. And yes, this applies extraterritorially: if your AI’s output is used in the EU, you’re in. I’ve chatted with a few execs who’ve dealt with similar regs, and they say the key is starting small: map out your AI uses before the deadlines bite.
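The “whichever is higher” rule is simple arithmetic, and it flips for smaller firms: under Article 99(6), SMEs and startups face whichever cap is lower. Here’s a minimal Python sketch, assuming the three Article 99 ceilings (€35M/7% for banned practices, €15M/3% for most other breaches, €7.5M/1% for misleading information given to authorities). The tier names and the `max_fine_eur` function are our own, and the result is a legal ceiling, not a prediction of what a regulator would actually impose.

```python
# Article 99 fine ceilings: (fixed cap in EUR, share of worldwide annual turnover).
# Tier names are our own shorthand, not terms from the Act.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),
    "misleading_information": (7_500_000, 0.01),
}


def max_fine_eur(tier: str, global_turnover_eur: float, is_sme: bool = False) -> float:
    """Return the maximum possible fine for a violation tier.

    Larger companies face whichever cap is higher; SMEs and startups
    face whichever is lower.
    """
    fixed_cap, turnover_share = FINE_TIERS[tier]
    turnover_cap = turnover_share * global_turnover_eur
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


# A company with EUR 1bn turnover: the 7% cap exceeds the EUR 35M fixed cap.
big_company_ceiling = max_fine_eur("prohibited_practice", 1_000_000_000)
# An SME with EUR 20M turnover: 3% of turnover is lower than EUR 15M, so it applies.
sme_ceiling = max_fine_eur("other_obligation", 20_000_000, is_sme=True)
```

For a billion-euro business the percentage is what bites; for a small firm the lower-of-two rule can shrink the ceiling dramatically.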

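The rollout? The Act entered into force in August 2024, penalties have been enforceable since August 2025, and most high-risk rules apply from August 2026 (high-risk AI built into regulated products such as medical devices gets until August 2027). For small businesses, there’s some relief, like sandboxes for testing, but don’t count on leniency if you’re big.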
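If you want to sanity-check which rules already apply on a given day, the key dates fit in a small lookup table. This is a Python sketch using the application dates in Regulation (EU) 2024/1689 as we understand them; the milestone labels are our own simplified summaries, and any later amendment to the timeline would have to be added by hand.

```python
from datetime import date
from typing import List, Optional, Tuple

# Simplified milestones of the EU AI Act, sorted by date (labels are our summaries).
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibited practices banned; AI literacy duty applies"),
    (date(2025, 8, 2), "General-purpose AI model rules and penalty regime apply"),
    (date(2026, 8, 2), "Most remaining rules apply, incl. Annex III high-risk and transparency duties"),
    (date(2027, 8, 2), "High-risk rules for AI in regulated products (Annex I); deadline for pre-2025 GPAI models"),
]


def milestones_in_force(on: date) -> List[str]:
    """List every milestone whose date has been reached on the given day."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[Tuple[date, str]]:
    """Return the first milestone still ahead of the given day, or None."""
    for start, label in MILESTONES:  # relies on MILESTONES being sorted
        if start > on:
            return start, label
    return None


for label in milestones_in_force(date.today()):
    print("In force:", label)
print("Next up:", next_milestone(date.today()))
```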

Risk Categories at a Glance

Here’s a quick table to wrap your head around the risks—makes it easier to spot where your tools fit.

| Risk Level | Examples | Key Obligations | Potential Fines |
|---|---|---|---|
| Unacceptable | Social scoring, manipulative AI, real-time facial recognition in public for law enforcement (narrow exceptions) | Banned | Up to €35M / 7% turnover |
| High-Risk | AI in hiring, credit decisions, medical devices | Risk assessments, data quality, human oversight, docs | Up to €15M / 3% turnover |
| Limited-Risk | Chatbots, deepfakes | Tell users it’s AI | Up to €15M / 3% turnover |
| Minimal-Risk | Spam filters, basic games | Voluntary best practices | None under the Act itself, but watch overlaps like GDPR |

This setup keeps things proportionate, but overlook a high-risk label and you’re toast.

Why UK and German Businesses Are Front and Center

German companies? You’re EU insiders, so compliance is mandatory. The German government is already setting up oversight bodies, and by August 2026 it has to have at least one AI regulatory sandbox up and running where firms can test systems under supervision. A buddy of mine in Munich runs a logistics firm using AI for route optimization, which could edge into high-risk territory if it ever acts as a safety component in road-traffic management, and he’s already auditing datasets to avoid bias fines.

For UK outfits, it’s trickier but no less real. Brexit means you’re not bound directly, but if you sell to EU customers or your AI affects them, the Act applies. Take a Manchester startup exporting AI software to Frankfurt: non-compliance could block market access or trigger penalties. I’ve heard stories of UK firms aligning voluntarily to stay competitive—smart move, as the UK might mirror some rules down the line.

Bottom line, whether you’re in Berlin or Birmingham, ignoring this could tank partnerships or invite lawsuits. And for US-based readers eyeing European expansion, treat this as your roadmap to avoid headaches.

10 Essential Tips to Nail EU AI Act 2026 Compliance

Alright, let’s get practical. These tips aren’t pie-in-the-sky; they’re drawn from what real companies are doing to dodge those massive fines. Start with the basics and build up—I’ve seen teams save months by tackling inventory first.

Tip 1: Inventory Your AI Systems Right Now

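Don’t guess: list every AI tool you use or build. What’s its purpose? Data inputs? Outputs? Then classify it by risk using Article 6 and Annexes I and III of the Act (Annex III lists high-risk use cases like hiring; Annex I covers AI acting as a safety component in regulated products like machinery). A German manufacturing firm I know found that a “low-risk” predictive maintenance AI was actually high-risk because it tied into safety systems. Skip this, and you’re flying blind.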
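Your inventory doesn’t need fancy tooling; a spreadsheet works. If you’d rather keep it in code, here’s a minimal Python sketch of one inventory record exported to CSV. The field names (`owner`, `used_in_eu`, `risk_level`, and so on) are hypothetical choices, not anything the Act prescribes.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List


@dataclass
class AISystemRecord:
    """One row in an internal AI inventory (field names are illustrative)."""
    name: str
    owner: str
    purpose: str
    data_inputs: str
    outputs: str
    used_in_eu: bool
    risk_level: str = "unclassified"  # fill in once the system is classified


def inventory_to_csv(records: List[AISystemRecord]) -> str:
    """Serialise the inventory to CSV so it can live in a shared spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(AISystemRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


inventory = [
    AISystemRecord("CV screener", "HR", "Rank job applicants", "CVs", "Shortlist score", True),
    AISystemRecord("Spam filter", "IT", "Filter inbound email", "Emails", "Spam flag", True, "minimal"),
]
print(inventory_to_csv(inventory))
```

Anything still marked `unclassified` is your to-do list for the next tip.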

Tip 2: Classify Risks Accurately

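Use Article 6 and the Commission’s guidance to bucket your AI. High-risk? Prep for assessments. Article 6(3) lets some Annex III systems off the hook, for example ones that only perform a narrow procedural task, but never if they profile people, and you have to document that reasoning. Pro tip: if it’s in employment or finance, assume high-risk until proven otherwise.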
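To make the “assume high-risk until proven otherwise” habit concrete, here’s a rough first-pass triage in Python. It’s a deliberate simplification, assuming you’ve tagged each inventory entry with a use and an area: the keyword sets are hypothetical stand-ins for the real tests in Article 5 (bans), Article 6 with Annexes I and III (high-risk), and Article 50 (transparency). It returns only the strictest bucket, even though one system can carry both high-risk and transparency duties.

```python
# Hypothetical, simplified keyword sets; the legal tests live in Article 5,
# Article 6 with Annexes I and III, and Article 50.
PROHIBITED_USES = {"social_scoring", "manipulative_subliminal", "untargeted_face_scraping"}  # a subset
ANNEX_III_AREAS = {
    "biometrics", "critical_infrastructure", "education", "employment",
    "essential_services", "law_enforcement", "migration", "justice",
}
TRANSPARENCY_USES = {"chatbot", "deepfake", "synthetic_content"}


def triage(use: str, area: str, safety_component_of_regulated_product: bool = False) -> str:
    """Return a first-pass risk bucket for one inventory entry.

    Errs on the side of caution: anything that might be high-risk is flagged
    high-risk until a proper Article 6 assessment says otherwise.
    """
    if use in PROHIBITED_USES:
        return "unacceptable"
    if safety_component_of_regulated_product or area in ANNEX_III_AREAS:
        return "high"
    if use in TRANSPARENCY_USES:
        return "limited"
    return "minimal"


print(triage("cv_ranking", "employment"))
print(triage("chatbot", "customer_service"))
```

Treat the output as a flag for a proper review, not a verdict.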

Tip 3: Build a Solid Risk Management System

For high-risk stuff, ongoing assessments are key. Identify biases, test for failures, and mitigate. Human oversight is huge—train staff to override AI when needed.
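One common way to build in human oversight is to never let the model issue an adverse decision on its own. The Act asks that the people overseeing a high-risk system can disregard, override, or reverse its output; this Python sketch shows one hypothetical pattern for a loan-style decision, where only clearly positive scores are auto-approved and everything else waits for a named reviewer. The 0.8 cut-off and the `Decision` fields are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional

AUTO_APPROVE_ABOVE = 0.8  # hypothetical cut-off; set and document your own


@dataclass
class Decision:
    applicant_id: str
    model_score: float                 # model's approval score, 0 to 1
    outcome: str                       # "approve", "reject" or "needs_review"
    reviewed_by: Optional[str] = None  # set when a human makes the final call


def decide(applicant_id: str, model_score: float) -> Decision:
    """Auto-approve only clear cases; anything else goes to a human."""
    if model_score > AUTO_APPROVE_ABOVE:
        return Decision(applicant_id, model_score, "approve")
    return Decision(applicant_id, model_score, "needs_review")


def human_override(decision: Decision, reviewer: str, final_outcome: str) -> Decision:
    """Record the reviewer's final call, which can go either way."""
    if final_outcome not in {"approve", "reject"}:
        raise ValueError("final_outcome must be 'approve' or 'reject'")
    decision.outcome = final_outcome
    decision.reviewed_by = reviewer
    return decision


# Usage: a borderline applicant is held for review, then approved by a person.
pending = decide("A-102", 0.55)
final = human_override(pending, reviewer="j.schmidt", final_outcome="approve")
```

Recording `reviewed_by` also gives you an audit trail showing that oversight actually happened.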

Tip 4: Focus on Data Quality and Governance

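Garbage in, garbage out. Make sure training data is relevant, representative, and checked for bias (no real dataset is perfectly bias-free), and keep it GDPR-compliant. UK firms, tie this into your existing UK GDPR and Data Protection Act 2018 work for efficiency.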
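The Act asks high-risk providers to examine their data for possible biases but doesn’t prescribe a metric. As one example, here’s a Python sketch comparing selection rates across groups in historical hiring outcomes. The 0.8 threshold is the US “four-fifths” rule of thumb, borrowed here as a heuristic rather than an EU AI Act requirement, and the group labels are placeholders.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group, from (group, selected) pairs."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


outcomes = [("A", True), ("A", True), ("A", False), ("A", True),
            ("B", True), ("B", False), ("B", False), ("B", False)]
rates = selection_rates(outcomes)
ratio = impact_ratio(rates)
if ratio < 0.8:  # US four-fifths rule of thumb, not an EU AI Act threshold
    print(f"Review dataset and model: impact ratio {ratio:.2f}")
```

A low ratio doesn’t prove discrimination on its own, but it tells you where to dig.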

Tip 5: Amp Up Transparency and Explainability

Users need to know when AI is at play. For high-risk, explain decisions—like why a loan app got denied. Tools like explainable AI models help here.
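For a simple scoring model, explaining a decision can be as direct as showing which inputs pushed the score up or down. This Python sketch assumes a hypothetical linear credit model, where each feature’s contribution is just weight times value; more complex models need dedicated explainability tooling, and the weights below are made up.

```python
def explain_linear_score(weights, features, bias=0.0, top_n=3):
    """Break a linear model's score into per-feature contributions.

    Each contribution is weight * feature value, so the contributions plus
    the bias add up exactly to the score. Returns the score and the top_n
    features with the largest absolute effect.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked[:top_n]


# Hypothetical credit model: negative contributions push towards rejection.
weights = {"income_k": 0.02, "missed_payments": -0.5, "years_at_address": 0.05}
applicant = {"income_k": 30, "missed_payments": 3, "years_at_address": 1}
score, reasons = explain_linear_score(weights, applicant, bias=0.2)
for name, effect in reasons:
    print(f"{name}: {effect:+.2f}")
```

Here the missed payments dominate, which is exactly the kind of plain-language reason a rejected applicant can act on.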


Tip 6: Document Everything Like Your Business Depends On It

Technical docs, risk logs, incident reports—keep ’em updated. This proves compliance during audits.

Tip 7: Nail Conformity Assessments

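For most high-risk systems listed in Annex III, you can self-assess through internal checks; biometric systems, and AI built into products that already need third-party checks, may require an outside notified body. Once you pass, slap on that CE mark and register the system in the EU database.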

Tip 8: Set Up Post-Market Monitoring

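Track AI performance after launch. Report serious incidents to the authorities fast: the outer limit is 15 days after you become aware, shrinking to 10 days if someone dies and 2 days for widespread or critical-infrastructure incidents. Sitting on it won’t help your case.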
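Article 73 sets outer limits for reporting serious incidents, counted from when you become aware of them: 15 days in general, 10 days if someone dies, and 2 days for widespread infringements or serious disruption of critical infrastructure. Here’s a Python sketch that turns those into a deadline date. It assumes calendar days, and the category keys are our own shorthand; the Act also says to report immediately, so treat these as the latest possible dates, not targets.

```python
from datetime import date, timedelta

# Outer reporting limits under Article 73, in days after becoming aware.
# Keys are our own shorthand; calendar days are an assumption.
REPORTING_DAYS = {
    "serious_incident": 15,
    "death": 10,
    "widespread_or_critical_infrastructure": 2,
}


def reporting_deadline(became_aware: date, incident_type: str) -> date:
    """Latest date to notify the market surveillance authority."""
    return became_aware + timedelta(days=REPORTING_DAYS[incident_type])


print(reporting_deadline(date(2026, 9, 1), "serious_incident"))
```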

Tip 9: Train Your Team on AI Literacy

Everyone touching AI needs the basics on ethics and risks. The Act has required this AI literacy since February 2025. Make it hands-on, not boring slides.

Tip 10: Leverage Resources and Stay Updated

Use EU regulatory sandboxes for testing, and codes of practice for guidance. UK folks, reuse your GDPR compliance work wherever the two overlap. And don’t sleep on updates; things evolve.

Wrapping It Up: Stay Ahead or Pay the Price

Getting compliant with the EU AI Act 2026 isn’t about stifling innovation; it’s about building trust. Start early, involve your team, and treat it like a business edge—clients love responsible AI. I’ve watched companies turn this into a selling point, attracting EU deals they might’ve missed. If you’re proactive, those €35M fines stay fictional.

Key Takeaways

- Start with an AI audit: Know what you’ve got before rules tighten in 2026.

- Risk classification is everything: Get it wrong, and fines follow.

- Documentation saves the day: It’s your proof in audits.

- UK businesses aren’t exempt: EU market ties pull you in.

- Training isn’t optional: Build AI-savvy teams now.

- Resources exist: Sandboxes and guides lighten the load.

FAQ

Q: Does the EU AI Act 2026 really apply to my small UK startup if we only have a few EU customers?

A: Yeah, probably—if your AI’s used in the EU or affects folks there, you’re in scope. Better classify early to be sure.

Q: What’s the biggest fine risk for German companies under this?

A: Up to €35M or 7% of global annual turnover (whichever is higher) for banned AI practices, while high-risk slip-ups can hit €15M or 3%. Focus on compliance basics to avoid both.

Q: How do I know if my AI is high-risk?

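A: Check Annex III (use cases like biometrics, hiring, or credit scoring) and Annex I (AI acting as a safety component in regulated products like medical devices or machinery). The Commission’s guidelines help with the edge cases.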

Q: Can I use existing GDPR setups for this?

A: Absolutely, overlaps in data handling make it easier. Just expand to AI-specific risks.

Q: When do I need to worry about general-purpose AI models?

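A: Obligations for providers of general-purpose AI models kicked in August 2025 for new models; models already on the market before then have until August 2027. If you’re just building chatbots on top of someone else’s model, your core duty is transparency: tell users they’re talking to AI, which applies from August 2026.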

Q: Are there any breaks for SMEs?

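A: Yep. For SMEs and startups, each fine is capped at the lower of the fixed amount or the turnover percentage, and you get priority, free-of-charge access to regulatory sandboxes for testing without full pressure.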
