Look, I’ve been messing around with voice-to-text apps for years now, ever since I got tired of pecking away at my keyboard during long meetings. You know that feeling when you’re trying to jot down notes, but your fingers just can’t keep up? That’s where AI voice-to-text transcription accuracy comes into play—it’s supposed to make life easier, right? But how does it really stack up against good old manual typing? Well, from what I’ve seen and dug into, AI can hit around 85-99% accuracy in ideal setups, while manual typing by a pro often nails 95-100%. It’s not always a slam dunk, though; things like background chatter or a thick accent can throw it off big time.

Understanding AI Voice-to-Text Basics

AI voice-to-text, or speech recognition as some call it, has come a long way. It’s basically software that listens to your voice and turns it into text on the fly. Think about dictating an email instead of typing it out—saves your wrists, doesn’t it? But the accuracy? That’s the big question. In clear conditions, top tools boast high marks, but real life isn’t always crystal clear.

What Makes AI Transcription Tick?

These systems use machine-learning models trained on large amounts of recorded speech. Services like Google Cloud Speech-to-Text or OpenAI’s Whisper process audio in seconds, picking up words, punctuation, and sometimes even speaker changes, depending on the tool. From my own tinkering, I’ve noticed they shine with straightforward English, but toss in some slang or jargon, and you might end up editing more than you bargained for. Figures quoted for tools like these tend to hover around 90-95% accuracy for everyday use.

Manual Typing: The Old-School Approach

On the flip side, manual typing—or human transcription—means someone listens and types it out themselves. It’s slower, sure, taking hours for what AI does in minutes, but the payoff is reliability. Pros can catch nuances that machines miss, like sarcasm or context clues. If you’ve ever transcribed an interview by hand, you know it’s tedious, but the end result is spot-on, often 99% or better. For stuff like legal docs or medical notes, this is still the go-to because mistakes aren’t an option.

Key Factors Affecting AI Voice-to-Text Transcription Accuracy

Not all audio is created equal, and that’s where things get tricky. I’ve had days where my phone nails every word, and others where it’s like it’s hearing a different language. Let’s break down what trips up AI voice-to-text transcription accuracy the most.

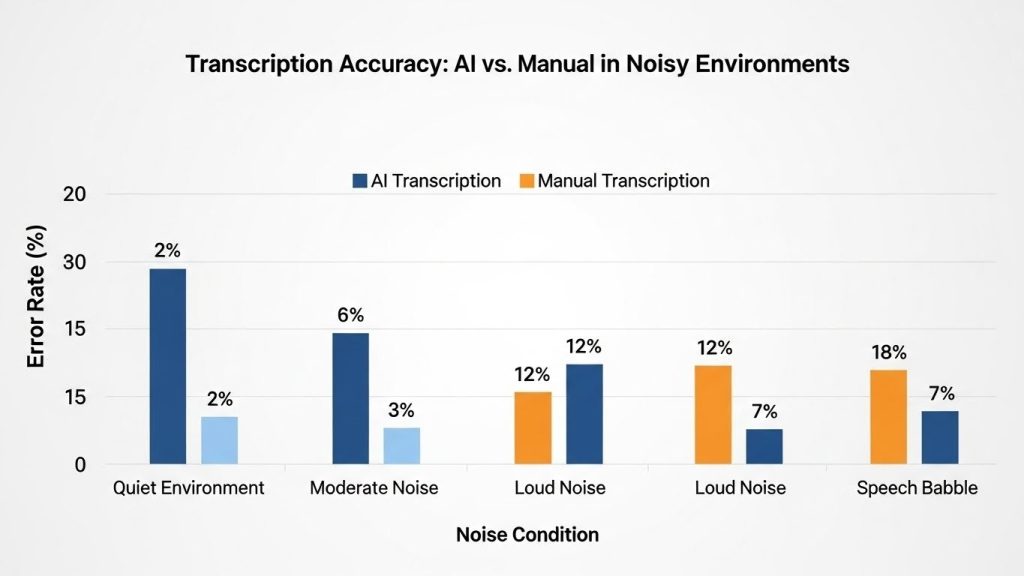

Audio Quality and Noise Issues

Background noise is a killer. Imagine trying to transcribe a podcast in a noisy cafe—AI might mix up “bear” with “beer” or worse. Studies show that with clean audio, accuracy jumps to 95%, but add echoes or chatter, and it drops to 80-85%. Manual typing? A skilled person can filter that out mentally, keeping errors low.
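If you want to check numbers like these on your own recordings, accuracy is commonly derived from word error rate (WER): the word-level substitutions, insertions, and deletions needed to turn the AI transcript into a trusted reference, divided by the number of reference words. Here’s a minimal Python sketch of that idea. The function names and sample sentences are my own illustration, not taken from any of the tools mentioned, and real benchmarks usually normalize punctuation and numbers more carefully than this.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (one fewer than the current row) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def accuracy_percent(reference: str, hypothesis: str) -> float:
    """Accuracy as 100 * (1 - WER), floored at zero."""
    return max(0.0, 1.0 - word_error_rate(reference, hypothesis)) * 100


# Illustrative sentences only: the "bear"/"beer" mix-up from the noisy cafe.
reference = "i saw a bear"
hypothesis = "i saw a beer"
wer = word_error_rate(reference, hypothesis)
acc = accuracy_percent(reference, hypothesis)
print(f"WER: {wer:.2f}, accuracy: {acc:.0f}%")
```

One wrong word out of four gives a WER of 0.25, or 75% accuracy, which shows how quickly a short clip can swing the percentage.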

Accents, Dialects, and Multiple Speakers

Accents throw a wrench in things. If you’re from the South or have a non-native twang, AI might struggle more than with standard American English. One report I came across highlighted how tools like Otter.ai rack up more errors with multicultural speakers—up to 84 mistakes in a short transcript versus just 17 for manual. And if there’s overlapping talk? Forget it; AI often jumbles who said what.

Direct Comparison: AI vs. Manual Accuracy Stats

Alright, let’s get to the meat of it—numbers don’t lie. Based on what I’ve pulled together from various sources, AI voice-to-text transcription accuracy isn’t far behind manual in simple scenarios, but it lags in complexity.

Real-World Numbers from Recent Studies

Here’s a quick table to lay it out:

| Aspect | AI Transcription | Manual Typing |
|---|---|---|
| Average Accuracy | 80-99% (85-95% typical) | 95-100% |
| Time to Complete | Minutes | Hours/Days |
| Cost per Minute | Cents | Dollars |
| Error Types | Word mix-ups, punctuation | Rare, mostly minor |
| Best For | Quick notes, meetings | Legal, medical |

From a 2025 guide, top AI like Deepgram hits 91-98%, but hybrids (AI plus human check) push to 99%+. In health settings, AI had way more errors—84 vs. 17 for manual in one study. Shocking how context matters, huh?

When AI Wins (and When It Doesn’t)

AI crushes it for speed—transcribe a 30-minute call in under 5 minutes? Yes please. But for precision, manual wins hands down. I’ve used AI for blog drafts, and it’s great 90% of the time, but I always proofread. One time, it turned “fiscal year” into “physical ear”—hilarious, but not helpful. Manual avoids that nonsense.

For more on picking the right tool, see our guide on best AI transcription software for beginners.

Improving Your AI Voice-to-Text Results

Nobody wants a garbled transcript, so how do you bump up that AI voice-to-text transcription accuracy? It’s not rocket science—just some smart tweaks.

Tools and Tricks for Better Output

Start with good gear: A decent mic cuts noise. Train the AI if possible—some apps let you add custom words. Tools like Rev or Whisper are solid picks, often hitting 95% with clear speech. Speak slowly, enunciate, and pause for punctuation. Oh, and avoid eating while talking; crumbs don’t help!
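Not every app exposes a custom-vocabulary setting, so one low-tech fallback is to clean up known mis-hearings after the fact. The sketch below is a hypothetical post-processing step in Python, not a feature of Rev, Whisper, or any other tool named here; the `CORRECTIONS` table and the `apply_corrections` helper are names I made up for illustration.

```python
import re

# Hypothetical correction table: phrases the AI keeps getting wrong,
# mapped to what was actually said. Build yours from your own transcripts.
CORRECTIONS = {
    "physical ear": "fiscal year",
}


def apply_corrections(transcript: str, corrections: dict[str, str]) -> str:
    """Replace each known mis-hearing with its intended phrase.

    Matching is case-insensitive and limited to whole words, so
    "physical earrings" is left alone. The replacement is inserted as
    written, so capitalization at the start of a sentence may need a
    manual touch-up.
    """
    for wrong, right in corrections.items():
        pattern = re.compile(r"\b" + re.escape(wrong) + r"\b", flags=re.IGNORECASE)
        # A function replacement avoids backslash handling in `right`.
        transcript = pattern.sub(lambda _match, text=right: text, transcript)
    return transcript


print(apply_corrections("Revenue for the physical ear rose.", CORRECTIONS))
```

A table like this only catches mistakes you have already seen, so it complements proofreading rather than replacing it.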

If you’re into deeper dives, read up on advanced speech recognition tips.

Hybrid Methods: The Best of Both Worlds

This is my favorite hack: Let AI do the heavy lifting, then tweak manually. Studies say editing an AI draft takes half the time of full manual. It’s like having a rough sketch and filling in the details—efficient and accurate.

Wrapping this up, it’s clear AI voice-to-text is a game-changer for quick tasks, but manual typing holds the crown for zero-tolerance accuracy needs. Depending on your situation, mix ’em or pick one—either way, technology’s making things easier every day.

Key Takeaways

- AI voice-to-text transcription accuracy averages 85-95%; AI beats manual typing on speed but not always on precision.

- Manual typing shines at 95-100% for complex audio, though it’s slower and pricier.

- Factors like noise and accents can drop AI accuracy sharply—prep your setup to avoid pitfalls.

- Hybrid approaches offer 99%+ results without full effort.

- Tools like Whisper or Otter.ai are worth trying for everyday use.

FAQ

What’s the typical AI voice-to-text transcription accuracy rate? It usually lands between 85% and 95%, but can climb to 99% with perfect audio. I’ve found it varies a lot based on how clear you speak.

How does AI voice-to-text transcription accuracy compare to manual typing in noisy places? AI often dips to 80% or lower with background noise, while manual can stay at 95%+ because humans filter distractions better. Tough spot for tech there.

Can I improve AI voice-to-text transcription accuracy on my own? Absolutely—use a quiet room, speak clearly, and pick tools with custom vocab. Editing afterward helps too, turning good into great.

Is manual typing always more accurate than AI for transcription? Not always, but mostly yes for tricky stuff like accents or jargon. AI’s catching up, though; in simple cases, it’s neck-and-neck.

What tools boost AI voice-to-text transcription accuracy the most? Ones like OpenAI Whisper or Google Cloud do well, often 90-98%. Give ’em a shot for your next meeting.

Why might AI voice-to-text transcription accuracy fail in meetings? Multiple speakers overlapping or echoes confuse it. Manual handles that chaos way better, from what I’ve experienced.


AI voice-to-text transcription accuracy has sparked endless debates, especially as tools evolve. In this deep dive, we’ll unpack everything from raw stats to real-life quirks, building on the basics above but going way further. Think of this as your comprehensive rundown, pulling from studies, user stories, and tech trends up to 2026.

Starting with the fundamentals: AI systems rely on machine learning to parse speech, but they’re not infallible. A 2025 analysis pegged consumer apps at 85-88% for clean input, while pro versions like Rev hit 99% with human oversight. Manual, meanwhile, leverages human intuition—think understanding “knight” vs. “night” in context.

Diving into factors: Noise isn’t just annoying; it’s an accuracy assassin. One health study showed AI racking up 84 errors in accented audio, versus manual’s 17. Accents? AI trains on diverse data, but rare dialects still stump it. Multiple speakers add chaos: AI might label the wrong speaker or mash sentences together.

Stats table expanded:

| Condition | AI Accuracy | Manual Accuracy | Notes |
|---|---|---|---|
| Clear Audio | 95-99% | 99-100% | AI nearly matches |
| Noisy Background | 80-85% | 95% | Human adaptability wins |
| Accented Speech | 80-90% | 97% | Context comprehension key |
| Technical Jargon | 85-95% | 98% | Custom training helps AI |
| Multi-Speaker | 75-90% | 96% | AI struggles with overlap |
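Percentages can hide how much cleanup is actually involved, so it helps to turn them into word counts. This Python sketch copies the accuracy ranges from the table above and estimates how many wrong words to expect. The 1,000-word transcript length and the names `ACCURACY_TABLE`, `expected_errors`, and `error_range` are assumptions for illustration.

```python
# Accuracy ranges in percent, copied from the table above as (low, high).
# A single figure such as 95% is stored as (95, 95).
ACCURACY_TABLE = {
    "Clear Audio": {"ai": (95, 99), "manual": (99, 100)},
    "Noisy Background": {"ai": (80, 85), "manual": (95, 95)},
    "Accented Speech": {"ai": (80, 90), "manual": (97, 97)},
    "Technical Jargon": {"ai": (85, 95), "manual": (98, 98)},
    "Multi-Speaker": {"ai": (75, 90), "manual": (96, 96)},
}


def expected_errors(word_count: int, accuracy_percent: float) -> float:
    """Estimated number of wrong words at a given accuracy."""
    return word_count * (100 - accuracy_percent) / 100


def error_range(word_count: int, accuracy_range: tuple) -> tuple:
    """Return (fewest, most) expected errors for a (low, high) accuracy range."""
    low_acc, high_acc = accuracy_range
    return expected_errors(word_count, high_acc), expected_errors(word_count, low_acc)


WORDS = 1000  # assumed transcript length, purely for illustration

for condition, row in ACCURACY_TABLE.items():
    ai_best, ai_worst = error_range(WORDS, row["ai"])
    manual_best, manual_worst = error_range(WORDS, row["manual"])
    print(
        f"{condition}: AI {ai_best:.0f}-{ai_worst:.0f} errors, "
        f"manual {manual_best:.0f}-{manual_worst:.0f} errors"
    )
```

On a 1,000-word transcript, the noisy-background row works out to 150-200 wrong words for AI against about 50 for manual typing.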

From blogs and reports, AI’s pros include scalability—handle hours of audio fast—while cons hit hard in fields like law, where a misheard word could cost big. Manual’s pricey (dollars per minute) but trustworthy.

Improvements? Beyond the basics, integrate transcription with the productivity tools you already use. Hybrids cut time in half, per one comparison. I once used AI for a podcast; it was 90% spot-on, and I fixed the rest manually in 10 minutes.

In health or research, AI speeds things up (3-7 minutes per file) but needs checks for cultural nuances. Tools keep evolving, and 2026 may bring further gains.

Overall, while AI voice-to-text transcription accuracy impresses for casual use, manual remains gold for precision. Mix them for optimal results, and always verify.

Key Citations

- Manual Transcription Vs. Speech Recognition: Why Some Things Shouldn’t Be Automated – Ditto

- Automatic vs. Manual Transcript: Time, Cost and Accuracy – Sally AI

- A comparative assessment of AI and manual transcription quality in …

- Speech to Text Accuracy: My 2025 Guide to Getting the Most from Voice Transcription