AI in CCTV face identification has come a long way, but it’s far from perfect. Research suggests it can help forensics teams narrow down suspects from blurry footage, though error rates climb in real-world scenarios, especially with poor lighting or awkward angles. Accuracy tops 99% in ideal lab tests, but it drops in the field, and the errors fall more often on people of color and women. The evidence leans toward treating it as a helpful lead generator rather than slam-dunk proof, given cases of wrongful arrests. Privacy worries add another layer, with unchecked surveillance potentially tracking everyday folks without consent.

Key Benefits and Drawbacks

- Boosts investigations by quickly scanning vast databases, like matching CCTV grabs to mugshots.

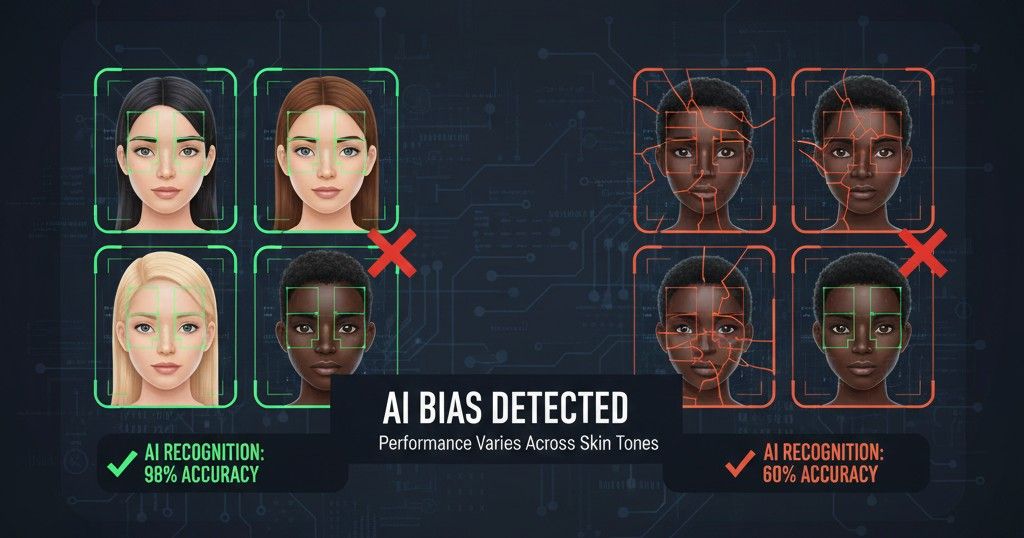

- But biases mean higher misidentification for darker-skinned individuals: error rates reached 34.7% for darker-skinned women in older tests, versus about 0.8% for light-skinned men.

- Serves as support in court, not standalone evidence; always needs human review.

- Raises red flags on data misuse, like in immigrant tracking or public monitoring.

How It Fits in Forensics

Think of AI in CCTV face identification as a sidekick for detectives. It analyzes pixels to spot patterns in faces, pulling possible matches from arrest records or licenses. Success stories include nabbing child predators or exonerating the innocent, but flops like false arrests highlight why it’s no magic fix. For more, check reliable sources like NIST reports on algorithm performance.

Privacy and Ethical Angles

It’s a double-edged sword: handy for catching bad guys, but creepy when cameras log your every move. Laws are catching up, with states adding guardrails, yet concerns linger about overreach in places like New Orleans’ live feeds. Balance is key; use it wisely to avoid eroding trust.

AI in CCTV Face Identification:

You’ve probably seen those crime shows where a blurry CCTV clip gets enhanced by some high-tech AI, and boom—the suspect’s face pops up with a perfect match. It’s exciting stuff, but in the real world, AI in CCTV face identification isn’t quite that seamless. Sure, it can speed up investigations and provide solid leads for forensic teams, but it’s got its share of glitches, biases, and privacy headaches that make it more of a handy assistant than a foolproof hero. Let’s break this down without the hype, drawing from actual cases and tech insights to see where it shines and where it falls short.

Understanding AI Face Identification in CCTV Systems

At its core, this tech is about teaching computers to spot and compare faces in video feeds. It’s not magic; it’s algorithms crunching data points like eye distance, nose shape, and jawline from CCTV footage. But CCTV cameras aren’t always crystal clear—think grainy night shots or people turning away—and that’s where things get tricky.

The Basics of How It Works

AI systems pull frames from surveillance videos, then run them through databases of known faces, like mugshots or driver’s licenses. Top algorithms hit over 99% accuracy in controlled tests, matching or beating fingerprint tech. In practice, though? Factors like lighting, angle, or even a hat can drop that rate. I’ve heard of setups where the system flags potential matches, but a human analyst has to double-check—kind of like how you’d squint at a fuzzy photo before saying, “Yeah, that’s him.”
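To make that flag-then-review flow concrete, here is a minimal sketch in Python. It assumes each face has already been turned into a list of numbers (an embedding) by some model, which is how many modern systems work but is not spelled out above. The function names, the three-number vectors, and the 0.8 threshold are all made up for illustration; real systems use much longer vectors and carefully tuned thresholds.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(probe, gallery, threshold=0.8, top_k=3):
    """Return up to top_k gallery entries scoring at or above the threshold.

    The result is a list of leads for a human analyst to review,
    not an identification. The threshold value is an assumption.
    """
    scored = [(name, cosine_similarity(probe, vec)) for name, vec in gallery.items()]
    candidates = [(name, score) for name, score in scored if score >= threshold]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return candidates[:top_k]


# Hypothetical toy data: a database of known faces and one CCTV frame.
gallery = {
    "record_A": [0.9, 0.1, 0.3],
    "record_B": [0.2, 0.8, 0.5],
    "record_C": [0.85, 0.2, 0.35],
}
probe = [0.88, 0.15, 0.32]

leads = rank_candidates(probe, gallery)
for name, score in leads:
    print(f"{name}: similarity {score:.3f} (needs human review)")
```

Notice that two records clear the threshold with nearly identical scores. That is exactly the situation where an analyst, not the software, has to make the call.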

Integration with Forensic Investigations

In forensics, AI in CCTV face identification acts as a starting point. Police might feed a suspect’s image from a robbery video into the system, which scans millions of records for hits. It’s helped in real spots, like identifying victims in shootings or linking crimes across states. But courts are wary; it’s not admissible as ironclad proof without backups like DNA or witnesses, because errors can lead to big mistakes.

Real-World Applications and Success Stories

Don’t get me wrong—when it works, it’s impressive. Law enforcement has racked up wins using this tool to crack tough cases. For instance, in Scranton, Pennsylvania, it nailed a guy assaulting a teen online by matching his pic to a state database, leading to a quick confession. Or take Fairfax County, Virginia, where it helped bust a child sex trafficker from a social media snap.

Here are a few standout examples in a quick list:

- Exonerating innocents: In Florida, it cleared a man accused of vehicular homicide by finding a witness who proved he wasn’t driving.

- Tackling trafficking: California rescuers used it to spot a missing girl in sex ads, getting her out safely.

- Catching predators: The FBI ended a 16-year manhunt for a child assaulter via passport photo matches.

- Solving violent crimes: Baltimore cops ID’d an assault suspect from bus footage, wrapping the case fast.

If you’re curious about the tech in action, the video “How AI facial recognition is being used to track down criminals” (listed under Key Citations) shows some practical setups without overdoing the drama.

In gang busts, like in Maryland, it linked suspects with tattoos to organized theft rings, preventing more violence. These stories show the potential, but they’re balanced by the need for careful handling.

The Flip Side: Limitations and Challenges

Okay, now for the not-so-glamorous part. AI in CCTV face identification has real hurdles that can trip up investigations and hurt innocent people. It’s not about bashing the tech—it’s about being honest so we use it right.

Accuracy Issues in Everyday Use

Lab tests boast 99.92% accuracy for the best systems, but CCTV footage? Often blurry or off-angle, pushing error rates up. NIST evaluations note that while top algorithms handle huge databases well, real-world factors like motion blur or low resolution can make matches unreliable. One expert put it bluntly: “It’s a lead, not evidence.” And false positives? They happen, sometimes leading to wrongful arrests.
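A rough back-of-the-envelope sketch shows why even a tiny per-comparison error rate still produces false hits on a big database. The one-in-a-million false match rate and the gallery size below are hypothetical numbers picked for easy arithmetic, not figures from NIST or any vendor, and the calculation assumes each comparison errs independently.

```python
def expected_false_matches(gallery_size, false_match_rate):
    """Average number of wrong hits when one probe is compared to every record."""
    return gallery_size * false_match_rate


def prob_at_least_one_false_match(gallery_size, false_match_rate):
    """Chance that a single search returns one or more wrong hits.

    Assumes every comparison is an independent trial (a simplification).
    """
    return 1 - (1 - false_match_rate) ** gallery_size


# Hypothetical inputs, chosen only to illustrate the scaling effect.
gallery_size = 1_000_000
false_match_rate = 1e-6

print(expected_false_matches(gallery_size, false_match_rate))
print(round(prob_at_least_one_false_match(gallery_size, false_match_rate), 3))
```

Under these assumed numbers, a single search averages about one wrong hit, and the chance of getting at least one is well over half. Blurry footage would only push the per-comparison rate higher.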

Bias and Fairness Problems

Here’s where it gets thorny. Studies show the tech misfires more on people of color, women, and nonbinary folks. Error rates for light-skinned men? Around 0.8%. For darker-skinned women? Up to 34.7% in older tests. A 2019 federal review confirmed it’s best for middle-aged white guys, with Black and Asian faces 10-100 times more likely to get mismatched. Why? Training data often skews white and male, plus mugshot databases overrepresent minorities due to arrest disparities.

Take Porcha Woodruff’s case—she was eight months pregnant when Detroit police arrested her for carjacking based on a bad AI match. All six known wrongful accusations from this tech? Black individuals. It’s a pattern that screams for better, more diverse datasets.

Privacy Concerns That Can’t Be Ignored

Surveillance creep is real. AI-powered CCTV can track your face across cities without you knowing, raising huge privacy red flags. In New Orleans, a nonprofit runs live facial recognition on over 200 cameras, sparking debates on who controls the data. Misuse risks include identity theft and the targeting of vulnerable groups like immigrants; ICE has used the tech for arrests leading to family separations.

Key worries in a table for clarity:

| Concern | Description | Example Impact |
|---|---|---|
| Data Storage | Biometric info held indefinitely without consent | Hackers could steal millions of faces, enabling fraud |
| Misuse by Authorities | Tracking protests or personal habits | Chills free speech; violates Fourth Amendment rights |
| Over-Surveillance in Communities | More cameras in minority neighborhoods | Amplifies racial profiling and distrust |
| Lack of Regulation | Few laws on how data is shared or deleted | Leads to unchecked power, like in health or abortion tracking |

It’s stuff that makes you pause—handy for security, but at what cost to everyday privacy?

Moving Forward: Best Practices for Responsible Use

To make AI in CCTV face identification a true asset, we need smarter approaches. First off, always pair it with human oversight—no arrests based solely on AI hits. Train systems on diverse data to cut bias, and run regular audits like NIST’s ongoing tests. States are stepping up with laws requiring warrants for searches or banning it in sensitive spots.
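As a sketch of what a basic bias audit could look like, the snippet below computes the false match rate separately for each demographic group from labeled test comparisons. The function name, the record layout, and the tiny sample are hypothetical and purely illustrative; this is not NIST’s methodology, just the general idea of checking error rates group by group.

```python
from collections import defaultdict


def false_match_rate_by_group(trials):
    """Compute the false match rate for each group.

    trials: iterable of (group, predicted_match, is_same_person) tuples.
    Only impostor trials (is_same_person is False) count toward the rate,
    since a false match can only happen when the faces belong to
    different people.
    """
    impostor_trials = defaultdict(int)
    false_matches = defaultdict(int)
    for group, predicted_match, is_same_person in trials:
        if not is_same_person:
            impostor_trials[group] += 1
            if predicted_match:
                false_matches[group] += 1
    return {g: false_matches[g] / impostor_trials[g] for g in impostor_trials}


# Synthetic audit data, invented for this example.
trials = [
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, False),   # false match
    ("group_a", False, False),
    ("group_b", True, False),   # false match
    ("group_b", True, False),   # false match
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_a", True, True),    # genuine pair, ignored for this metric
]

rates = false_match_rate_by_group(trials)
for group, rate in sorted(rates.items()):
    print(f"{group}: false match rate {rate:.2f}")
```

A gap between groups like the one in this toy data is the kind of signal a regular audit is meant to surface before the system is used on real cases.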

For forensics pros, treat it like any tool: verify matches with other evidence, disclose methods in court, and stay transparent. As one report notes, there is only a narrow window to question assumptions and avoid repeating past forensic flops. For more on balancing AI ethics, see our piece on emerging tech risks.

Conclusion

Wrapping this up, AI in CCTV face identification offers real value in forensics, from speeding up leads to solving cold cases, but it’s no silver bullet. The biases, accuracy dips, and privacy pitfalls remind us to handle it carefully. By focusing on ethical use and better regs, we can harness its strengths without the downsides. It’s about progress, not perfection—keeping people safe while respecting rights.

Key Takeaways

- AI boosts forensic efficiency but requires human checks to avoid errors.

- Bias hits harder on minorities and women; diverse training data is crucial.

- Privacy risks demand strong laws to prevent misuse.

- Successes like trafficking busts show potential, but wrongful arrests highlight dangers.

- Always verify AI outputs with multiple sources for trustworthy results.

FAQ

How accurate is AI face identification in real CCTV footage?

It can hit 99% in labs, but it drops in the field due to blur or bad angles. Some police chiefs have put the misidentification rate as high as 96% when the tech is used on its own.

Does this tech really have racial bias?

Yeah, studies show it’s way off for darker skin, with false matches for Black and Asian faces 10 to 100 times more likely than for white faces. It stems from skewed training data, but fixes are underway.

What about my privacy with all these cameras?

Big concern—cameras can track you without permission, leading to data breaches or government overreach. Push for laws that require consent or limits.

Can AI alone convict someone?

No way; it’s just a tool for leads. Courts need more, like witnesses or DNA, because false positives happen.

How has it helped in actual crimes?

Plenty. It has helped rescue trafficked kids, catch predators, and even exonerate the wrongfully accused, as in the Florida case where it helped find a witness.

Is it getting better over time?

Definitely; algorithms improve with more data, but we still need oversight to tackle biases and ethics.

Key Citations:

- What Science Really Says About Facial Recognition Accuracy and Bias Concerns

- A Forensic Without the Science: Face Recognition in U.S. Criminal Investigations

- When Artificial Intelligence Gets It Wrong

- Facial Recognition Success Stories

- Biased Technology: The Automated Discrimination of Facial Recognition

- Arrested by AI: Police ignore standards after facial recognition matches

- Facial Recognition and Privacy: Concerns and Solutions

- Live cameras are tracking faces in New Orleans

- AI Cameras and Facial Recognition: Balancing Security and Privacy

- How Accurate are Facial Recognition Systems – and Why Does It Matter?

- Accuracy and Fairness of Facial Recognition Technology in Low-Quality Images

- AI as a decision support tool in forensic image analysis

- How AI facial recognition is being used to track down criminals