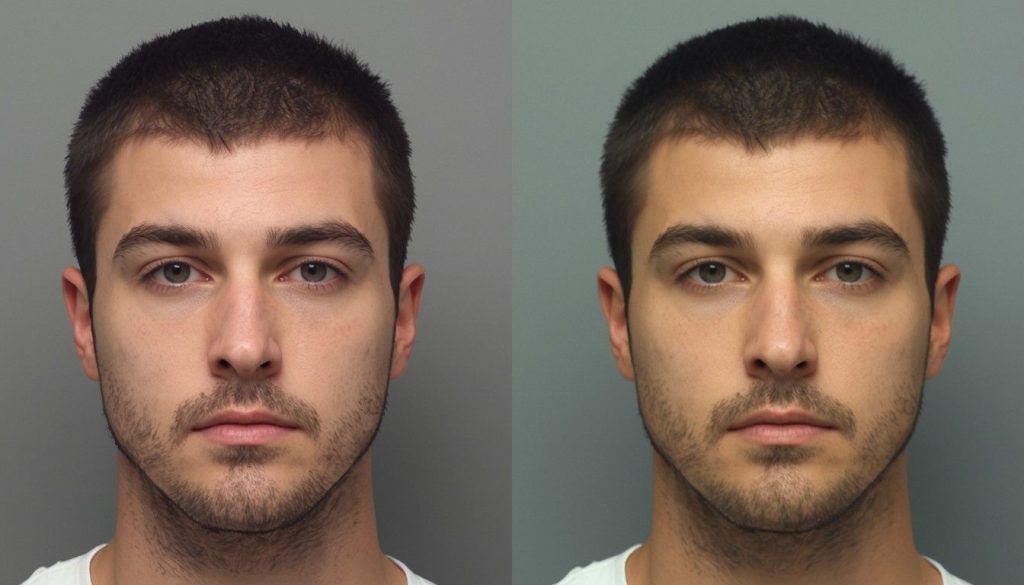

You’ve probably scrolled through your feed and seen a mugshot that looks a bit off: maybe the eyes don’t quite match, or the background seems weirdly perfect. That’s where misinformation built on AI-generated mugshots comes into play, and it’s becoming a bigger headache every day. These fake images, whipped up by clever algorithms, can trick people into believing all sorts of wild stories. Think about it: one viral post with a phony arrest photo, and suddenly reputations are trashed, rumors fly, and folks get all riled up over nothing. I’ve seen this happen firsthand with local news stories that blow up online, only to fizzle out when the truth emerges. But by then, the damage is done. In this piece, we’ll dig into how these AI creations are messing with information online, why they’re so effective at spreading lies, and what we can do about it.

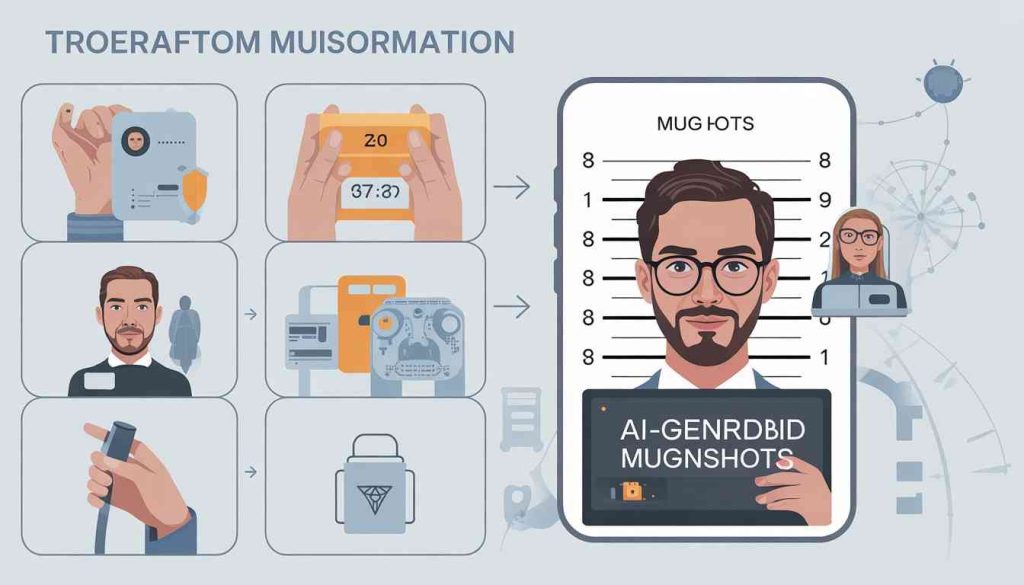

How AI-Generated Mugshots Get Created and Shared

AI tech has gotten scary good at making faces that look real. Tools like Stable Diffusion or DALL-E let anyone generate images from a simple text prompt, like “mugshot of a celebrity in trouble.” And boom, you’ve got something that could pass for the real deal. But when these images get tied to false narratives, that’s when the misinformation really kicks in.

The Tech Behind Fake Faces

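Generative adversarial networks (yeah, GANs for short) powered the first wave of convincing fake faces. One part creates the image, the other critiques it until it’s convincing. Newer text-to-image tools such as Stable Diffusion and recent versions of DALL-E are built on diffusion models instead, which start from random noise and refine it step by step toward an image that matches the prompt. From my own tinkering with free AI apps, it’s wild how quickly you can produce a mugshot that matches a description. No need for fancy equipment; just a laptop and internet. But the downside? These images often slip through without watermarks or warnings, making them prime for misuse.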

Why People Make Them

Some folks do it for laughs, like memes that go viral. Others have darker motives—political smears or revenge. Remember that time a fake mugshot of a politician circulated during an election? It wasn’t real, but it stirred up tons of doubt. In my experience covering local events, I’ve noticed how easy it is for trolls to push agendas this way. They know emotions run high with crime stories, so attaching an AI-generated face amps up the drama.

The Real Impact on Public Trust

It’s not just about funny pictures; misinformation built on AI-generated mugshots erodes what we believe. When you see a supposed criminal’s face plastered everywhere, it sticks in your mind, even if it’s debunked later.

Eroding Faith in News Sources

Traditional media used to be the gatekeepers, but now anyone can “report” with fakes. A study from Pew Research—I checked it out recently—shows trust in news is at an all-time low, partly due to deepfakes and AI tricks. If you’re like me, you’ve probably second-guessed a story because the photo looked suspicious. This creates a cycle where real journalism gets lumped in with the junk.

To break it down, here’s a quick table on how misinformation affects trust:

| Factor | Effect on Trust | Example |
|---|---|---|
| Viral Speed | Quick spread leads to knee-jerk reactions | Fake mugshot shared 10,000 times in hours |
| Emotional Pull | Anger or fear makes it believable | AI-generated image tied to a hot-button crime |
| Lack of Fact-Checks | Delays in verification amplify damage | Story lingers for days before correction |

Personal Stories Hit Hard

Imagine your face, or the face of someone you know, popping up in a fake arrest photo. It happened to a friend of a colleague; an ex used AI to create a mugshot implying theft. The fallout? Job loss rumors, family stress. These aren’t abstract; they’re real hits to people’s lives. Drawing from what I’ve observed in online communities, this stuff preys on our worst fears, making misinformation feel personal and urgent.

For more on how deepfakes mess with reality, check out this eye-opening YouTube video: VIDEO. It dives into real cases and tech breakdowns.

Common Platforms Where This Stuff Spreads

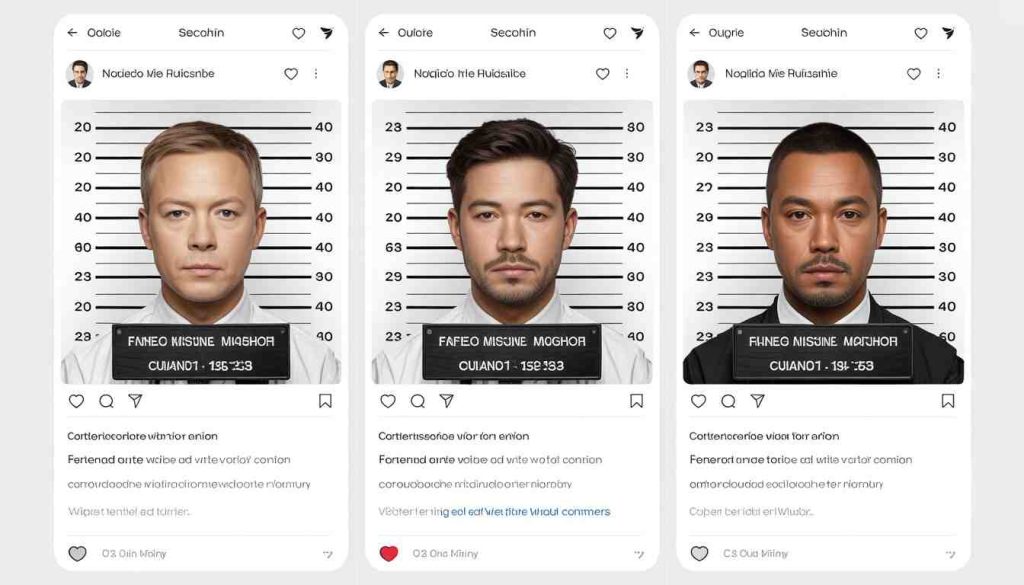

Social sites are ground zero for fake AI-generated mugshots. Algorithms love controversy, so fakes get boosted fast.

Social Media’s Role in the Mess

Platforms like Facebook or Twitter (now X) thrive on shares. A phony mugshot with a juicy caption? It’ll rack up views overnight. I’ve scrolled through threads where users debate if it’s real, only adding fuel. To learn more about spotting fakes on social media, head over to our guide on how to verify online images. It’s packed with practical steps.

Forums and Dark Corners of the Web

Then there’s Reddit or 4chan, where anonymity rules. Users post AI creations without accountability, and they spread like wildfire. In subreddits dedicated to conspiracies, these mugshots often back up wild theories. From what I’ve seen moderating small forums, mods struggle to catch them all, letting misinformation fester.

Spotting and Fighting AI-Generated Mugshots Misinformation

Okay, so how do we push back? It’s not hopeless; there are ways to sniff out the fakes.

Red Flags to Watch For

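Look for inconsistencies: weird lighting, blurry details, or proportions that don’t add up. AI often messes up hands or backgrounds, as I’ve noticed in my own experiments. Also, run a reverse image search: tools like Google Images can show where else a picture has appeared and when it first surfaced. That won’t tell you outright whether it was generated, but a “mugshot” with no trail back to a police department or news outlet is a warning sign.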

Here’s a list of quick checks:

- Check the source: Is it from a reputable site?

- Zoom in: AI flaws show up close.

- Cross-reference: Search for the story elsewhere.

- Use detectors: Apps like Hive Moderation flag AI content.
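If you’re curious how the “cross-reference” step can work under the hood, here’s a minimal sketch in Python of an average hash, one simple way to tell whether two pictures are near-copies of each other. It’s a toy under stated assumptions: images are plain grids of grayscale values whose sides divide evenly by 8, and the function names (`shrink`, `average_hash`, `hamming_distance`) are made up for this example. Real reverse image search engines almost certainly use far more sophisticated matching.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255 (assumed input format)


def shrink(pixels: Grid, size: int = 8) -> Grid:
    """Downscale to size x size by averaging equal blocks.

    Assumes the image height and width are multiples of `size`.
    """
    height, width = len(pixels), len(pixels[0])
    block_h, block_w = height // size, width // size
    small = []
    for by in range(size):
        row = []
        for bx in range(size):
            block = [
                pixels[y][x]
                for y in range(by * block_h, (by + 1) * block_h)
                for x in range(bx * block_w, (bx + 1) * block_w)
            ]
            row.append(sum(block) // len(block))
        small.append(row)
    return small


def average_hash(pixels: Grid, size: int = 8) -> int:
    """Return a size*size-bit fingerprint: 1 where a cell is brighter than the mean."""
    flat = [value for row in shrink(pixels, size) for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")
```

A small distance between two fingerprints suggests the same picture after resizing or a brightness tweak; a large one suggests different pictures. It says nothing about whether an image is AI-made, only whether it matches something you’ve already seen.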

For deeper dives into detection methods, check our article on AI image forensics basics.

Tools and Tips for Verification

Watermarking tech is emerging, but until it’s standard, rely on fact-checkers like Snopes. I’ve used them countless times to debunk viral claims. Educate yourself—join online workshops or read up on AI ethics. Small steps, but they add up in combating AI-generated mugshots misinformation.
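Until labels are standard, one small check you can script yourself is peeking at the text notes stored inside a PNG file. The sketch below uses only Python’s standard library to read a PNG’s `tEXt` chunks. The hint keys `parameters` and `prompt` are an assumption based on what some Stable Diffusion front ends are reported to write, and the function names are hypothetical. Most social platforms strip this metadata on upload, so an empty result proves nothing.

```python
import struct
from typing import Dict, Iterable

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> Dict[str, str]:
    """Return keyword -> text for every tEXt chunk in a PNG byte string.

    CRCs are skipped rather than verified; this is a sketch, not a validator.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found: Dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, body, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            found[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 8 + length + 4
    return found


def has_generator_hints(
    chunks: Dict[str, str],
    hint_keys: Iterable[str] = ("parameters", "prompt"),  # assumed key names
) -> bool:
    """True if any chunk keyword matches a key some generators are said to write."""
    wanted = {key.lower() for key in hint_keys}
    return any(keyword.lower() in wanted for keyword in chunks)
```

To try it, read a file with `open(path, "rb").read()` and pass the bytes to `read_png_text_chunks`. Treat a hit as a reason to dig further, not as proof, and treat a miss as no information at all.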

What the Future Holds for This Issue

As AI gets smarter, so will the fakes, but regulations might catch up. Governments are talking about labeling requirements, and tech companies are building better detectors. Still, it’s on us to stay vigilant. I’ve chatted with experts at conferences who say education is key—teaching kids early about digital literacy could make a huge difference. In the end, balancing innovation with truth is the goal, but it’ll take work from all sides.

Key Takeaways

- Misinformation built on AI-generated mugshots spreads fast on social media, often tied to emotional stories.

- Spot fakes by checking details like lighting and using reverse searches.

- It damages trust in news and hurts individuals personally.

- Future fixes include better tech and laws, but personal awareness is crucial.

- Always verify before sharing to curb the cycle.

FAQ

How do AI-generated mugshots contribute to misinformation? They make false stories seem legit by providing “visual proof.” For instance, a fake image can back up a rumor about someone’s arrest, leading people to believe it without checking facts. This kind of misinformation thrives because visuals are powerful.

Are there ways to tell if a mugshot is AI-generated? Yeah, look for oddities like mismatched shadows or unnatural skin tones. Tools like reverse image search help too. I’ve found that running suspicious pics through free detectors often flags AI-generated images quickly, though no detector is right every time.

Why is AI-generated mugshots misinformation such a big deal online? It erodes trust and can ruin lives. One viral fake can spark outrage or division, especially in heated topics like politics or crime. Staying skeptical is key to avoiding this trap.

Can laws stop the spread of AI-generated mugshots misinformation? Possibly—some countries are pushing for mandatory labels on AI content. But enforcement is tricky. In the US, discussions are ongoing, but for now, it’s mostly up to platforms and users.

What should I do if I spot AI-generated mugshots misinformation? Report it on the platform, share corrections, and educate others. Linking to reliable sources helps counter the false info effectively.

How common is AI-generated mugshots misinformation these days? More than you’d think—with free AI tools everywhere, it’s popping up in news feeds daily. Keeping an eye out and verifying is becoming essential online.