Look, AI is everywhere these days, from suggesting your next Netflix binge to deciding who gets a job interview. In Europe, though, and especially in Germany, people take fairness and privacy seriously. With Germany’s AI market projected to reach €10 billion or more by 2026, there’s a strong push to tackle bias and privacy head-on. The EU AI Act, for example, will soon apply in full, while GDPR remains the main framework for data protection. If you’re in the US keeping an eye on this, it’s worth noting how Europe’s approach could shape global standards. From what I’ve observed, companies have scrambled to adapt, especially after real cases where AI went wrong showed how much damage bias can do.

- Europe’s AI ethics focus emphasizes human rights, with Germany leading in investments but facing scrutiny over biased algorithms in hiring and policing.

- Privacy under GDPR means AI can’t just hoover up personal data without clear reasons, and violations can lead to hefty fines, often running into millions of euros.

- By 2026, high-risk AI systems will need strict checks, but there’s debate on whether this stifles innovation or protects people.

- Research shows bias often stems from skewed training data, and Germany’s efforts, like government sandboxes, aim to fix that without over-regulating.

The Growing AI Landscape in Germany

Germany’s not messing around with AI. They’ve poured billions into research and startups, making it a hotspot in Europe. But with growth comes responsibility, especially on ethics. I’ve chatted with tech folks who say the market’s exploding because of manufacturing and automotive giants like Siemens and BMW leaning into AI for smarter factories.

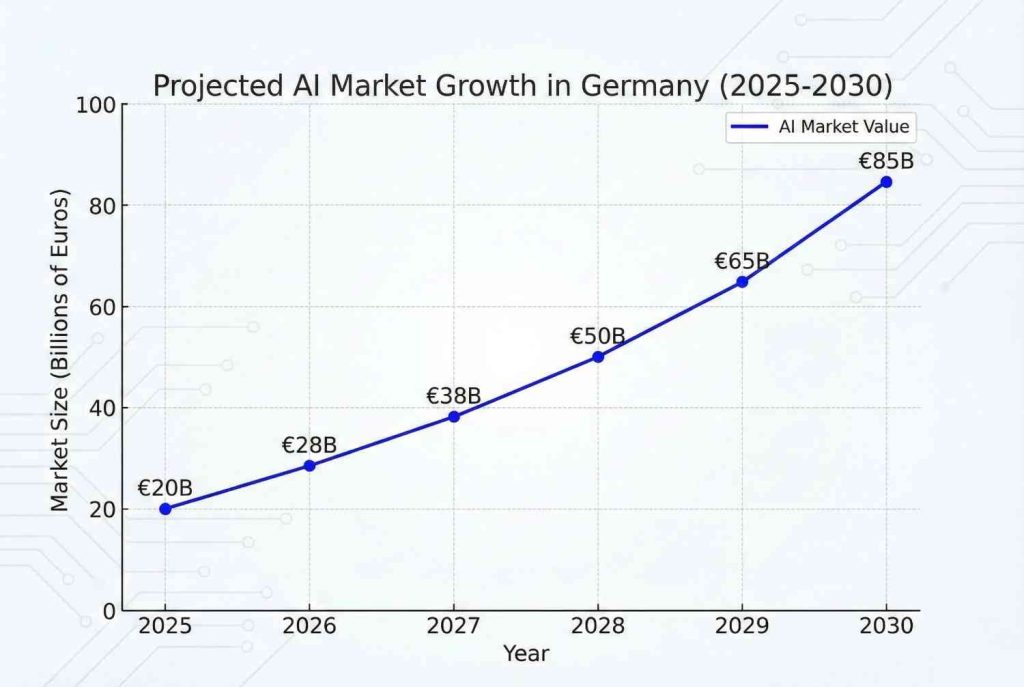

Market Projections for 2026

Projections vary depending on the source, but most point to rapid growth. Statista, for instance, pegs the AI market in Germany at about US$13.65 billion in 2025, which at recent exchange rates works out to roughly €12 billion. That already exceeds the €10 billion figure, which likely reflects more conservative estimates for certain segments, such as generative AI. Fortune Business Insights, meanwhile, expects the market to climb from US$12.18 billion in 2025 to considerably more by 2032, a compound annual growth rate (CAGR) of 23.9%. Part of this growth is fueled by government initiatives, including the national AI strategy launched in 2018, which is still evolving.
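To see what a 23.9% CAGR implies year by year, here is a minimal sketch that compounds the Fortune Business Insights base figure (US$12.18 billion in 2025) forward. It assumes the growth rate stays constant every year, which real forecasts don’t guarantee; the function name `project_market` is hypothetical.

```python
def project_market(base_value: float, cagr: float, years: int) -> float:
    """Compound a base-year market size forward at a constant annual growth rate."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return base_value * (1.0 + cagr) ** years


# Figures quoted in the text: US$12.18 billion in 2025, 23.9% CAGR.
# Assumption: the rate is constant every year (illustrative only).
BASE_2025_USD_BN = 12.18
CAGR = 0.239

for year in (2026, 2028, 2032):
    value = project_market(BASE_2025_USD_BN, CAGR, year - 2025)
    print(f"{year}: ~US${value:.2f} billion")
```

Compounding is why a "23.9% a year" headline more than quadruples the market over seven years, even though each single-year step looks modest.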

Here’s the rub, though: this boom isn’t just about money. Ethical missteps could slow it down if they go unaddressed. AI in supply chains could optimize operations significantly, for example, but if it discriminates against certain groups, lawsuits are likely to follow.

Investment and Government Role

The federal government is actively pumping funds into ethical AI. It has set up hubs in places like Munich and Berlin, and in line with the EU AI Act, startups will be able to test ideas in “regulatory sandboxes” from August 2026. Essentially, a sandbox is a safe space to experiment under supervision without immediately running afoul of the rules. From what I’ve seen in similar setups, this approach helps small companies compete without getting overwhelmed by red tape.

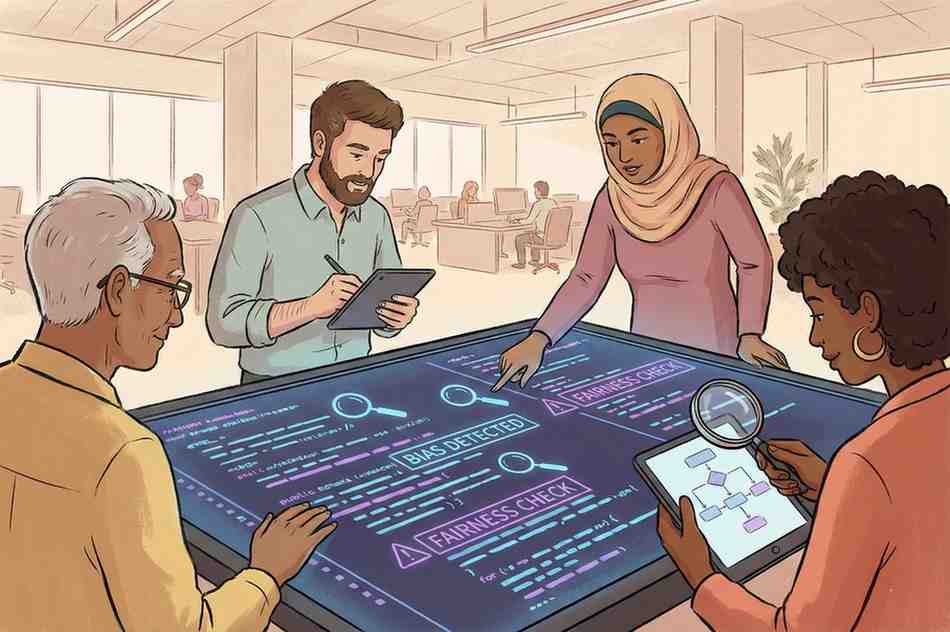

Understanding Bias in AI Systems

Bias in AI? It can be quite sneaky. Essentially, if the data fed into these systems reflects real-world prejudices, the AI will reproduce them. In Germany, where diversity has been a major focus post-WWII, this issue hits especially hard. For instance, I’ve heard stories from friends in tech about how facial recognition often fails on non-white faces, thereby amplifying existing inequalities.

Real-World Examples of AI Bias

Take hiring tools, for example. One infamous case involved algorithms favoring male candidates because historical data showed more men in tech roles. In Germany, the anti-discrimination agency flagged this back in 2023, warning that AI could worsen structural racism. Similarly, predictive policing systems flag “high-risk” areas based on past arrests, which often disproportionately affects minority neighborhoods. Moreover, the EU’s Fundamental Rights Agency highlighted this issue in a 2022 report on AI discrimination.

Even language models show bias against regional German dialects: a recent study from Mainz University found that speakers from Bavaria or Saxony get lower ratings than speakers of standard High German. It’s not intentional, but it happens.

Strategies to Mitigate Bias

Germany’s Federal Office for Information Security (BSI) put out a white paper in 2025 on spotting and fixing bias, from data collection to deployment. Key steps include:

- Diverse datasets: Pull from varied sources to avoid skews.

- Audits: Regular checks by independent bodies.

- Transparency: Make AI decisions explainable, so users know why something happened.
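For the transparency step, the simplest case is a linear scoring model, where each feature’s contribution is just its weight times its value. The sketch below assumes such a model; the feature names, weights, and function names are hypothetical. Non-linear models need model-agnostic methods such as SHAP instead.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    score: float
    contributions: dict[str, float]  # feature name -> weight * value


def explain_linear_score(weights: dict[str, float], bias: float,
                         features: dict[str, float]) -> Explanation:
    """Score a candidate with a linear model and record each feature's contribution."""
    contributions = {name: weights[name] * features.get(name, 0.0) for name in weights}
    score = bias + sum(contributions.values())
    return Explanation(score=score, contributions=contributions)


def top_reasons(expl: Explanation, k: int = 2) -> list[str]:
    """Return the k features that moved the score most, largest magnitude first."""
    ranked = sorted(expl.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [f"{name}: {value:+.2f}" for name, value in ranked[:k]]


# Hypothetical model and applicant, for illustration only.
weights = {"years_experience": 0.4, "certifications": 0.3, "missing_required_skills": -0.5}
applicant = {"years_experience": 5, "certifications": 2, "missing_required_skills": 1}

expl = explain_linear_score(weights, bias=0.1, features=applicant)
print(round(expl.score, 2))  # 2.2
print(top_reasons(expl))     # ['years_experience: +2.00', 'certifications: +0.60']
```

Reporting the top contributions alongside each decision gives users a concrete answer to "why did this happen?", and it also lets auditors spot features that act as proxies for protected attributes.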

The EU AI Act classifies systems as high-risk if they touch employment or law enforcement, requiring bias assessments by 2026. Companies like Volkswagen are already on it, training teams to spot these issues early.

Privacy Challenges and GDPR Compliance

Privacy’s the other big elephant in the room. AI loves data, but GDPR says “hands off” unless you’ve got good reason. Since 2018, GDPR’s been Europe’s shield against data abuses, and with AI, it’s even more crucial. I’ve seen businesses in the US underestimate this and get slapped with fines when expanding to Europe.

Key GDPR Principles for AI

GDPR isn’t anti-AI; it just demands responsibility. Core ideas include:

| Principle | What It Means for AI |
|---|---|
| Lawfulness | Must have a legal basis, such as consent or legitimate interest, for processing personal data in AI training. |
| Transparency | Tell people how their data is used; no black-box AI. |
| Data Minimization | Only collect what’s needed; don’t hoard info. |
| Accountability | Prove compliance with records and impact assessments. |
| Rights of Individuals | Folks can access, correct, or delete their data, even in AI systems. |
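The Data Minimization row can be enforced in code by filtering every record against the fields declared for a specific purpose before it reaches a model. This is a minimal sketch; the purpose register `ALLOWED_FIELDS`, its field names, and the `minimize` function are hypothetical, and in practice the register would mirror your documented records of processing.

```python
# Hypothetical register: processing purpose -> fields declared as necessary for it.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "credit_scoring": {"income", "existing_debt", "payment_history"},
    "churn_prediction": {"tenure_months", "plan", "support_tickets"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields declared for this purpose; refuse undeclared purposes."""
    try:
        allowed = ALLOWED_FIELDS[purpose]
    except KeyError:
        raise ValueError(f"no declared purpose {purpose!r}; refusing to process") from None
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "name": "Erika Mustermann",
    "nationality": "DE",
    "income": 52000,
    "existing_debt": 8000,
    "payment_history": "good",
}
print(minimize(raw, "credit_scoring"))
```

Failing closed on an unknown purpose is a deliberate choice: new uses of the data have to be declared (and justified) before any fields flow through.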

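Automated decisions? Article 22 gives people the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects, unless an exception applies: explicit consent, necessity for a contract, or authorization by law. That’s huge for things like loan approvals.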
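One common way to respect Article 22 in a pipeline is a routing step that holds back solely automated outcomes with legal effect unless an exception applies. The sketch below is simplified under stated assumptions: it models only the explicit-consent exception (contract necessity and authorization by law are left out), and `Decision` and `route` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    subject_id: str
    outcome: str             # e.g. "approve" or "reject"
    legal_effect: bool       # legal or similarly significant effect on the person?
    explicit_consent: bool   # explicit consent to automated decision-making on record?


def route(decision: Decision) -> str:
    """Decide whether a model output may be released without human review.

    Simplified: only the explicit-consent exception is modelled here.
    """
    if not decision.legal_effect:
        return "auto"
    if decision.explicit_consent:
        # Art. 22(3): the person still keeps the right to obtain human
        # intervention, to express their view, and to contest the decision.
        return "auto_with_safeguards"
    return "human_review"


print(route(Decision("c-101", "reject", legal_effect=True, explicit_consent=False)))  # human_review
```

Returning a distinct `auto_with_safeguards` state keeps the consent path visible downstream, so the appeal and human-intervention channels can be attached to exactly those decisions.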

Best Practices for Compliance

Start with privacy by design—build it in from the get-go. The UK’s ICO has guidance on AI decisions that’s super practical, like using anonymized data where possible.

For US companies, align with GDPR early if you’re eyeing European markets. I’ve advised a few startups on this, and mapping data flows saves headaches later.

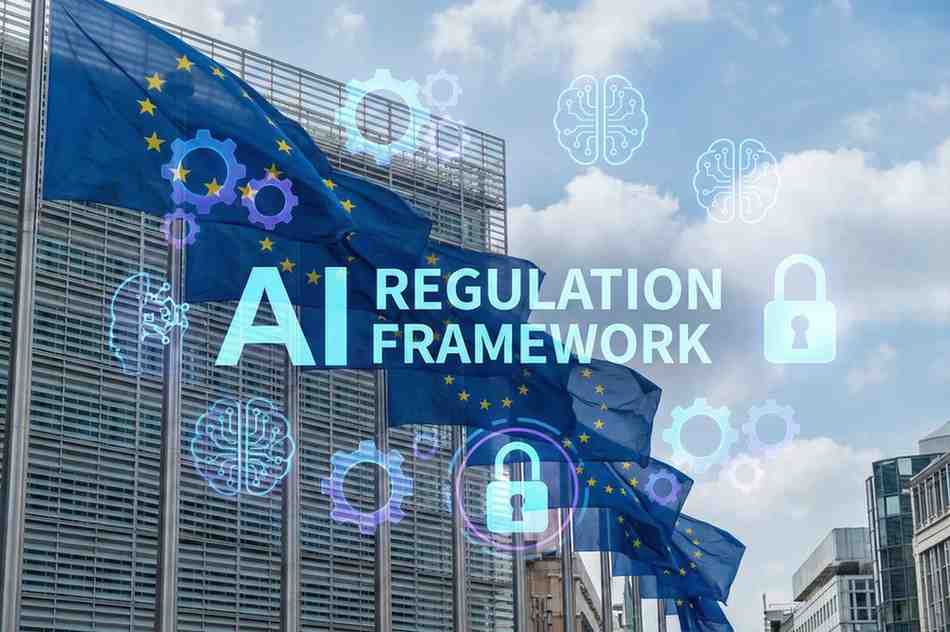

Ethical Frameworks in Europe

Europe isn’t just regulating; it’s building ethics into the core. The AI Act, which applies in large part from August 2026, aims to ensure trustworthy AI, meaning AI that is safe, transparent, and non-discriminatory. It is also risk-based: chatbots face only light transparency duties, whereas high-stakes uses such as medical diagnostics require proper certification.
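The risk-based idea can be pictured as a lookup from use case to tier, with obligations attached to each tier. This is a minimal sketch, not a legal classifier: the four tier names follow the way the European Commission describes the Act (unacceptable, high, limited, minimal), but the use-case mapping, `RiskTier`, and `classify` are hypothetical. Real classification depends on the Act’s annexes and legal review.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "conformity assessment required"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"


# Hypothetical mapping based on the examples discussed in this article.
USE_CASE_TIERS: dict[str, RiskTier] = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "hiring": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "medical_diagnostics": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Look up a use case; unknown ones default to HIGH so they get reviewed, not waved through."""
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


for case in ("customer_chatbot", "medical_diagnostics", "new_untested_idea"):
    tier = classify(case)
    print(f"{case}: {tier.name} ({tier.value})")
```

Defaulting unknown cases to the high tier is a conservative design choice for an internal inventory: it forces a review rather than assuming a new system is harmless.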

Germany adds its own flavor with a human-centered approach that puts particular focus on inclusivity. Ferda Ataman, Germany’s anti-discrimination commissioner, puts it well: “AI must not perpetuate discrimination; it should help overcome it.” That mindset is helping shift things in the right direction.

If you’re curious about how this compares globally, check out our piece on AI Ethics Worldwide for more.

As we wrap this up, it’s clear that Europe is setting a bar that balances innovation with protection. Germany’s market growth is exciting, but without strong ethics around bias and privacy, it could backfire. Businesses need to stay ahead by adapting to GDPR and the AI Act now. It’s not perfect, and debates over potential overregulation continue, but it’s a step toward fairer AI.

Key Takeaways

- Germany’s AI market is booming toward €10B+ by 2026, driven by industry but tempered by ethical rules.

- Bias mitigation starts with better data and audits; ignore it, and you risk discrimination claims.

- GDPR demands clear data handling in AI—lawful basis, minimization, and user rights are non-negotiable.

- The EU AI Act rolls out high-risk requirements in 2026, pushing for transparent systems.

- US firms: Align early to avoid fines; Europe’s model could inspire stateside changes.

FAQ

What’s the biggest AI ethics issue in Germany right now?

Bias in everyday tools like hiring software. It’s not always obvious, but studies show it hits minorities hardest, and the government’s cracking down with new guidelines.

How does GDPR affect AI training data?

You can’t just use personal info without a solid reason. Legitimate interest is getting a boost in reforms, but consent’s still king for sensitive stuff. Check the EDPB’s latest opinion for details.

Will the EU AI Act kill innovation?

Probably not—it seems likely to guide it instead. High-risk categories get scrutiny, but low-risk ones are freewheeling. Experts argue it builds trust, which attracts investment.

Can AI be completely unbiased?

Tough one. Evidence leans toward no, since data mirrors society. But with diverse teams and ongoing checks, you can minimize it a lot.

What’s a quick way to start GDPR compliance for AI?

Run a data protection impact assessment. It’s basically mapping risks and fixes—super helpful for spotting privacy gaps early.

How big is the fine risk under GDPR?

Fines can reach €20 million or 4% of total worldwide annual turnover, whichever is higher. That has already stung companies like Meta, which Ireland’s Data Protection Commission fined €1.2 billion in 2023, so it’s crucial not to skimp on compliance.
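The 4% figure is a ceiling, not a typical fine. Under Article 83(5) GDPR, the upper tier is €20 million or 4% of total worldwide annual turnover of the preceding financial year, whichever is higher; Article 83(4) sets a lower tier of €10 million or 2%. This small sketch computes that maximum; `gdpr_fine_cap` is a hypothetical helper, and actual fines are set case by case using the factors in Article 83(2).

```python
def gdpr_fine_cap(annual_turnover_eur: float, upper_tier: bool = True) -> float:
    """Maximum administrative fine for an undertaking under GDPR Art. 83.

    Upper tier (Art. 83(5)): EUR 20 million or 4% of worldwide annual turnover, whichever is higher.
    Lower tier (Art. 83(4)): EUR 10 million or 2%, whichever is higher.
    """
    fixed, share = (20_000_000, 0.04) if upper_tier else (10_000_000, 0.02)
    return float(max(fixed, share * annual_turnover_eur))


print(gdpr_fine_cap(100_000_000))    # smaller firm: the fixed EUR 20M cap dominates
print(gdpr_fine_cap(2_000_000_000))  # large firm: 4% of EUR 2bn, i.e. EUR 80M
```

The "whichever is higher" rule is why the fixed amount matters for smaller companies, while the percentage dominates for large groups.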

Now let’s dig into the nuances, starting with market stats and moving on to regulatory timelines, with more examples and insights drawn from reliable sources. Think of it as a fuller report on where things stand and where they’re headed.

Germany’s AI Growth and Ethical Challenges: Market Trends and Bias Mitigation

Germany’s AI scene is fascinating, mainly because it’s tied so closely to the country’s industrial backbone. Projections aren’t uniform, though. Grand View Research sees the overall AI market hitting US$203 billion by 2033 at a 27.2% CAGR, while extrapolations from 2025 data put 2026 at around US$15–20 billion. The €10B mentioned in the title might zero in on core segments like machine learning in manufacturing, where Germany excels. Government spending also plays a role: the national AI strategy includes €5 billion in funding through 2025, some of which goes into ethical R&D. In practice, this ties into “Industrie 4.0,” where AI optimizes production lines while ethical safeguards help ensure workers aren’t sidelined by biased automation.

Bias isn’t just theory. The Volkswagen Foundation noted back in 2021 that 5% of German firms use AI for hiring or loans, and biases in these processes can exclude women or immigrants. Fast-forward to 2025: UNESCO’s report on gender-related harms found that 58% of young women face AI-fueled online harassment, such as deepfakes. To tackle this, Germany’s inclusive approach includes evidence-based training, according to OECD insights. The BSI paper also recommends testing with metrics like demographic parity and involving ethicists in development teams. The following table illustrates common biases:

| Bias Type | Example | Mitigation |
|---|---|---|
| Selection Bias | Overrepresenting urban data, ignoring rural dialects. | Broaden sources geographically. |
| Algorithmic Bias | Favoring certain resumes based on keywords tied to gender. | Regular fairness audits. |
| Societal Bias | Predictive policing targeting minorities. | Community input in design. |
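Demographic parity, the testing metric recommended in the BSI paper, compares how often each group receives the positive outcome (for example, an interview invitation). Below is a minimal sketch of that check; the group labels and outcomes are made-up illustration data, and `selection_rates` and `demographic_parity_difference` are hypothetical helper names rather than any library’s API.

```python
from collections import defaultdict


def selection_rates(groups: list[str], predictions: list[int]) -> dict[str, float]:
    """Share of positive predictions (1, e.g. 'invite to interview') per group."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups: list[str], predictions: list[int]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(groups, predictions)
    if not rates:
        raise ValueError("need at least one prediction")
    return max(rates.values()) - min(rates.values())


# Made-up screening outcomes for two applicant groups, A and B.
groups = ["A"] * 5 + ["B"] * 5
predictions = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
print(selection_rates(groups, predictions))
print(demographic_parity_difference(groups, predictions))
```

Here group A is selected twice as often as group B, a gap an audit would flag for investigation. Parity is only one fairness metric, and the acceptable threshold is a policy decision rather than something the code can settle.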

How GDPR and the AI Act Shape Europe’s Modern AI Framework

Privacy-wise, GDPR’s intersection with AI is still evolving. WilmerHale’s 2025 guide stresses compliance across the whole lifecycle, from data collection (ensuring anonymization where possible) to deployment (including impact assessments). The EDPB’s opinion clarifies that legitimate interest can serve as a legal basis for AI models, but only where it is not overridden by the individuals’ rights and freedoms. According to EY, best practices include embedding data governance early, using pseudonymization, and training staff on AI-specific risks. Different industries also face unique challenges: healthcare AI must navigate strict consent requirements, while finance focuses on ensuring accuracy.
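Pseudonymization, one of the practices EY lists, can be as simple as replacing direct identifiers with a keyed hash before data enters a training set. The sketch below uses HMAC-SHA256 from Python’s standard library; the record fields and the `pseudonymize` name are hypothetical. Note that pseudonymized data still counts as personal data under GDPR, because whoever holds the key can re-link it.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    The same identifier and key always yield the same pseudonym, so records can
    still be joined, but reversing the mapping requires the secret key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Keep the key in a separate secrets store, never alongside the pseudonymized data.
key = secrets.token_bytes(32)

record = {"email": "erika@example.com", "purchases": 7}
training_row = {"user": pseudonymize(record["email"], key), "purchases": record["purchases"]}
print(training_row)
```

A keyed hash is used instead of a plain SHA-256 of the email because unkeyed hashes of guessable identifiers can be reversed by simply hashing candidate values.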

Europe’s AI Act: Ethical Leadership and the Roadmap for 2025–2026

Europe’s ethical push via the AI Act is groundbreaking, positioning the continent as a leader. Bans on prohibited practices such as social scoring applied from 2025, and general obligations begin in 2026. The Commission’s 2025 Apply AI Strategy aims to accelerate adoption, with guidelines on high-risk classification due in Q1 2026. According to Novagraaf, the Act covers a spectrum from low to unacceptable risk, tackling ethics head-on. In Germany, this means more sandboxes for testing, fostering innovation in an ethical, controlled way.

Controversies? Some say the Act is too vague in defining bias, which could lead to uneven enforcement; others praise its balance. From my perspective, it’s empathetic, protecting vulnerable groups while still allowing growth. For US readers, it’s a contrast to lighter federal regulation, although states like California are catching up.

All this builds trust because the approach is honest about the challenges, such as AI inheriting society’s flaws, while staying optimistic about the tools to fix them. Ultimately, 2026 will test whether Europe’s model can work globally.

Key Citations:

- Statista: Artificial Intelligence – Germany Market Forecast

- Fortune Business Insights: Germany Artificial Intelligence Market

- EU Artificial Intelligence Act developments

- European Commission: AI Act

- IBM: What is the EU AI Act?

- DW: Germany highlights discrimination risks of AI

- AI language models show bias against regional German dialects

- FRA: Bias in algorithms

- Germany: BSI publishes white paper on bias in AI

- OECD: Germany’s human-centred approach to AI

- AI and the GDPR: Understanding the Foundations of Compliance

- WilmerHale: Guide to AI and GDPR

- EDPB: Opinion on AI models

- ICO: Artificial intelligence

- EY: Things you should know about the EU AI Act and data management

- GDPR: Art. 22

- The hidden bias in AI: Programmed prejudice

- European Commission Publishes Apply AI Strategy

- KPMG: Decoding the EU Artificial Intelligence Act

- Novagraaf: EU AI Act and the ethical issues of AI

- UNESCO: Tackling Gender Bias and Harms in Artificial Intelligence (AI)

- Goethe-Institut: The implications of AI bias

- YouTube: Is GDPR Ready for AI?

- Keywords Everywhere: 69 New AI Market Size Stats

- Grand View Research: Germany Artificial Intelligence Market Size & Outlook