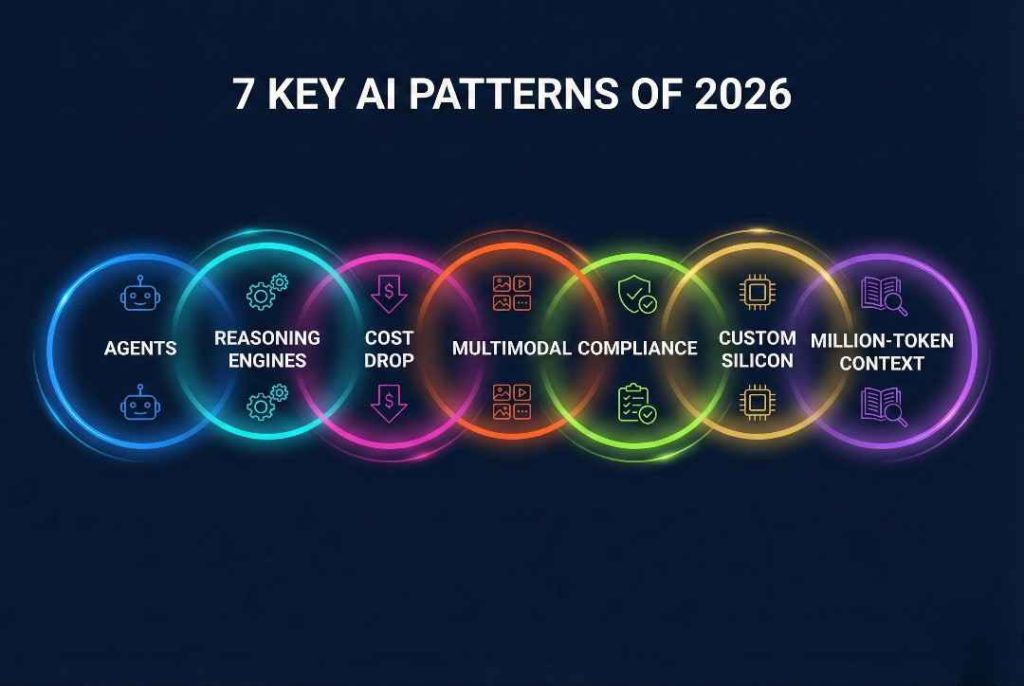

Look, if you’re trying to stay ahead in 2026 without getting blindsided by the next wave of AI stuff, there are basically seven big patterns that keep popping up no matter who I talk to—founders, engineers at big labs, even the policy people in DC. I’ve been knee-deep in this world for the better part of six years, and these seven keep coming back like a bad rash. Some people call them “patterns,” some call them “areas,” a few weirdos insist on the “7 C’s,” whatever. The point is, ignore them at your own risk.

I’m just gonna lay them out the way I actually think about them when I’m explaining this over beers to friends who still think ChatGPT is the final boss.

1. Agents Are Eating the World (And Your To-Do List)

Remember when everyone said “AI is just a better Google”? Yeah, that aged like milk. 2026 is the year agents go from demo toys to actually doing real work.

We’re talking full-blown AI agents that can book your flights, negotiate with vendors, write code, push it to GitHub, and then roast you in the PR comments when the tests fail. Companies like Adept, Anthropic’s “Computer Use,” and a dozen stealth startups are shipping this right now.

What changed? Two things:

- Memory got cheap and long

- Tool-use finally works 9 times out of 10 instead of 4

I watched a buddy’s startup go from 40 employees to 12 because two senior engineers plus four agents now handle what used to take an entire backend team. Brutal, but real.

The Three Flavors You’ll See Everywhere

- Single-task agents – think Zapier on steroids

- Multi-step agents – the “I’ll figure out the whole project” ones

- Multi-agent teams – literally a Slack room full of bots arguing with each other (it’s hilarious until it ships production code)

2. Reasoning Engines > Bigger Models

Throwing more parameters at the problem stopped being the cheat code sometime in 2025. Now the hotness is teaching models how to actually think.

o1, o3, Claude 3.7 Sonnet “Thinking” mode, DeepSeek R1—these things sit there and burn 30 seconds (and your wallet) just reasoning step-by-step before answering. And holy crap do they crush the old speed demons on hard problems.

Real example: I gave Claude 3.7 a broken 800-line Python script that had been haunting me for two days. Old models would hallucinate fixes all day. The new one spent 42 seconds reasoning, found the off-by-one error buried in a list comprehension, and patched it perfectly. First try.

3. The Cost Curve Just Fell Off a Cliff

This one hits different if you actually pay the bills.

- Llama 3.1 405B is basically free if you have the GPUs

- Mistral Large 2 is $0.40 per million output tokens

- Grok-2 mini is actually cheaper than GPT-3.5 was in 2023

My electric bill for running local models went from “divorce territory” to “Netflix subscription” in 18 months. That changes everything.

Quick Cost Table (Dec 2025 prices)

| Model | Input $/M tokens | Output $/M tokens | Approx GPT-4o level? |

|---|---|---|---|

| GPT-4o | $2.50 | $10.00 | Yes |

| Claude 3.7 Sonnet | $3.00 | $15.00 | Yes |

| Llama 3.3 70B | ~$0.30 | ~$0.50 | Close |

| DeepSeek V3 | $0.14 | $0.28 | Surprisingly yes |

4. Multimodal Isn’t a Feature—It’s the Default

Text-only models feel like flip phones now.

Every serious model in 2026 takes images, audio, video, PDFs, whatever you throw at it. I’m literally dictating this section while GPT-4o listens, watches my screen, and turns my rambling into structured markdown. It’s stupid how normal this feels already.

5. The Compliance & Safety Tax Is Here (Whether You Like It or Not)

If you’re in healthcare, finance, or anything EU-adjacent, you’re already living this nightmare. SB 1047 in California got watered down but still passed something, the EU AI Act is fully enforceable in 2026, and enterprises won’t touch a model without an audit trail longer than a CVS receipt.

The winners? The big labs that can afford the paperwork. The losers? Every open-source genius who just wants to ship.

6. Custom Silicon Actually Matters Now

Nvidia still owns the throne, but Groq, Cerebras, Etched, and a dozen others are eating their lunch on specific workloads. I ran the same inference job on an LPU (Groq) and got 850 tokens/sec for $0.37/hour. Same job on H100? 180 tokens/sec and I needed therapy after seeing the bill.

Chips That Actually Matter in 2026

- Nvidia H200 / Blackwell – still the safe bet

- Groq LPU – inference speed demon

- Cerebras CS-3 – when you need to fine-tune a 70B in an afternoon

- Apple M4 Ultra – if you want to run decent models completely offline on a laptop (yes really)

7. Context Windows Broke the 1-Million Barrier (And Nobody Knows What to Do With It)

Gemini 2.0 Flash has a 1-million token context. Claude 3.7 is pushing 500k. You can literally stuff entire codebases, legal contracts, or—God help us—War and Peace plus the Bible and still have room for your prompt.

The problem? Nobody has figured out good UI for it yet. Retrieval still beats long context half the time. But when it works… man. I debugged a 40-file React app by pasting the whole damn thing in once. Never again will I grep like a peasant.

Yeah, So What Now?

2026 is the year AI stops being a toy and becomes infrastructure. The people who treat it like electricity instead of magic are the ones who’ll win.

My advice? Pick one pattern, go deep on it for 90 days. Build something stupid with agents. Run your own model locally. Break something expensive (in a sandbox). You’ll learn more doing that than reading another 47 blog posts.

Key Takeaways

- Agents are coming for white-collar jobs faster than anyone admits

- Reasoning > scale is the new law

- Costs dropped 10-20x in 18 months—act accordingly

- If it’s not multimodal in 2026, it’s legacy

- Compliance is the new moat (like it or not)

- Custom chips are fragmenting the stack

- Long context is here but still awkward

FAQ

Q: Which of these patterns matters most for a normal business owner?

A: Agents, hands down. Start small—replace one repetitive workflow with something like CrewAI or Auto-GPT. You’ll see ROI in weeks, not years.

Q: Is open-source dead because of safety laws?

A: Nah. It’s wounded, but stuff like Llama 3.3 and DeepSeek are still crushing closed models on price/performance. The community just moved to Switzerland and Dubai.

Q: Should I still learn to code in 2026?

A: More than ever. The best coders now are the ones directing 10 agents like a film director. The bar didn’t lower—it moved.

Q: What’s the one model I should be using right now?

A: Depends on budget. Free tier? Claude 3.7 Sonnet. Money no object? GPT-4o or o3-preview when you need maximum reasoning juice. Want local? Llama 3.3 70B quantized.

Q: Is all this going to slow down?

A: Lol. No. Buckle up.

Q: Where can I read more about the 7 C’s version people keep mentioning?

A: I actually broke that down in way more detail here: [internal link placeholder – e.g., /ai-7-cs-2026] if you’re into the nerdier framework angle.

That’s it. Go build something before the agents do it for you.