Look, we’ve all scrolled through our feeds and stumbled on a video that just doesn’t sit right. Maybe it’s a politician saying something wild, or a celebrity endorsement that comes out of nowhere. With AI getting smarter by the day, these fake videos—deepfakes—are popping up everywhere. And with the 2026 midterm elections on the horizon, it’s not just harmless fun anymore. We’re talking about stuff that could sway votes, spread lies, and mess with what we think is real. I’ve watched enough of these to know spotting them isn’t always easy, but there are solid tricks that can help anyone get better at it. Stick with me here, and we’ll break down how to spot fake videos without needing a tech degree.

Understanding Deepfakes

Deepfakes aren’t some sci-fi gimmick; they’re real videos and audio clips made with AI tools that swap faces, voices, or even whole scenes. Think about how easy it is now to edit a photo on your phone: AI takes that up a notch for videos. These tools use machine learning to study real footage and then generate fakes that look scarily convincing.

How They’re Made

It starts with gathering tons of data on a person’s face or voice from public videos and photos. Algorithms like generative adversarial networks (GANs) pit two AI systems against each other: one creates the fake, the other hunts for flaws, and the loop repeats until the output can sometimes fool even experts. But here’s the thing: even the best deepfakes have glitches because AI isn’t perfect at mimicking every tiny human quirk. For instance, I’ve come across clips where the lighting just doesn’t match up, like the sun’s hitting the face from the wrong side.
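
If you’re curious what that tug-of-war looks like in numbers, here’s a toy sketch. It uses the standard GAN scoring formulas, but the detector scores are made up for illustration, and this is not how any particular deepfake app is built. Each score is the detector’s guess, between 0 and 1, that a clip is real.

```python
import math


def discriminator_loss(scores_on_real, scores_on_fake):
    """Low when the detector rates real clips near 1 and fakes near 0."""
    real_term = -sum(math.log(s) for s in scores_on_real) / len(scores_on_real)
    fake_term = -sum(math.log(1 - s) for s in scores_on_fake) / len(scores_on_fake)
    return real_term + fake_term


def generator_loss(scores_on_fake):
    """Low when the detector is fooled into rating fakes near 1."""
    return -sum(math.log(s) for s in scores_on_fake) / len(scores_on_fake)


# Hypothetical detector scores; every value must sit strictly between 0 and 1.
real_clips = [0.90, 0.95, 0.92]
early_fakes = [0.05, 0.10, 0.08]  # obvious fakes, easy to catch
later_fakes = [0.45, 0.55, 0.50]  # after many rounds, the detector is guessing

for label, fakes in [("early", early_fakes), ("later", later_fakes)]:
    print(
        label,
        "faker loss:", round(generator_loss(fakes), 2),
        "detector loss:", round(discriminator_loss(real_clips, fakes), 2),
    )
```

As the fakes improve, the faker’s loss drops while the detector’s loss climbs. Training keeps nudging both sides, which is why the end result can look so convincing.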

Real-World Examples

Remember those viral clips during past elections where candidates seemed to say outrageous things? In 2024, for example, a robocall used an AI-generated imitation of President Biden’s voice to tell New Hampshire voters to skip the primary. Fast forward, and experts are warning that 2026 could see even more sophisticated fakes, like bogus endorsements or scandal videos timed right before polls open. One report I dug into highlighted how states like California and Florida have passed laws aimed at deepfakes in election ads, but enforcement is tricky when anyone with a decent computer can whip one up.

The Threat to the 2026 Elections

Elections have always had their share of dirty tricks, but AI amps them up. Deepfakes could flood social media with false narratives, like a candidate admitting to corruption or stirring up division on hot-button issues. Republicans and Democrats alike are worried: bipartisan bills in places like Arizona and Michigan aim to require disclaimers on AI-generated content in campaigns. But let’s be real: not every state has caught up yet. In Maryland, officials are already prepping for 2026 by training staff on detection, after seeing how fast fakes spread in 2024.

The scary part? These fakes prey on our biases. If a video confirms what you already believe, you’re more likely to share it without checking. I’ve been fooled at a quick glance myself, until I paused and looked closer. With midterms deciding control of Congress, a well-timed deepfake could tip close races. Groups like the Brennan Center for Justice have pointed out that without better safeguards, trust in democracy takes a hit. And yeah, it’s not just videos: audio deepfakes in calls or ads are sneaky too.

Practical Ways to Spot Fake Videos

Okay, enough doom and gloom. Let’s get into the nuts and bolts of how to spot fake videos. You don’t need fancy software; a sharp eye and some habits can go a long way. Start by slowing down—don’t just watch and react.

Visual Clues

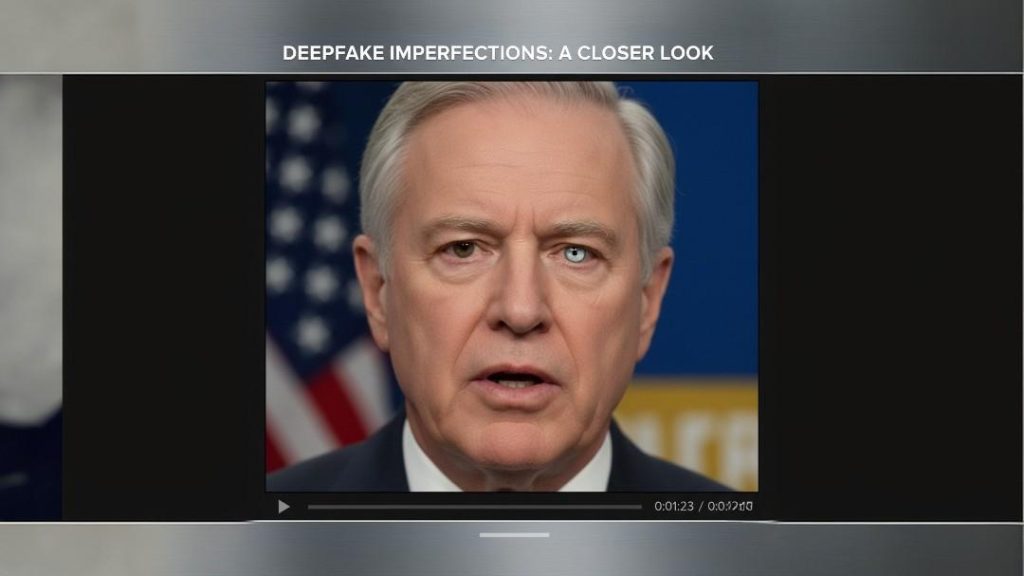

Focus on the face first, since that’s what most deepfakes target. Check the skin: Does it look too smooth, like plastic, or weirdly wrinkled in spots? Eyes and eyebrows are big giveaways: shadows might not line up right, or reflections in glasses could glitch when the head turns. Blinking is another one; real people blink at a natural rhythm, but fakes might overdo it or skip it entirely. Facial hair or moles? If they seem off, like not casting proper shadows, flag it.

Here’s a quick table of common visual red flags:

| Area to Check | What to Look For | Why It Matters |
|---|---|---|
| Eyes & Brows | Missing reflections or unnatural shadows | AI struggles with light physics |
| Lips & Mouth | Mismatched syncing with words | Lip-sync deepfakes often slip |
| Cheeks & Forehead | Inconsistent skin texture | Age or smoothness doesn’t match rest of face |
| Hands/Body | Extra fingers or jerky movements | AI distorts non-facial elements easily |
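
For the tinkerers, the blinking clue can be turned into a rough numeric check. The sketch below assumes you already have a per-frame "eye openness" number from some face-tracking tool (a hypothetical input here), and both the closed-eye threshold and the "normal" blink range are illustrative guesses, not clinical values.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count open-to-closed transitions in a per-frame openness series."""
    blinks = 0
    eyes_closed = False
    for value in eye_openness:
        if value < closed_below and not eyes_closed:
            blinks += 1          # eyes just closed: start of a new blink
            eyes_closed = True
        elif value >= closed_below:
            eyes_closed = False  # eyes reopened
    return blinks


def blinks_per_minute(eye_openness, fps, closed_below=0.2):
    """Convert the blink count into a per-minute rate."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(eye_openness) / fps / 60
    return count_blinks(eye_openness, closed_below) / minutes


def blink_rate_looks_odd(eye_openness, fps, low=5, high=40):
    """Flag clips whose blink rate falls outside an assumed normal range."""
    rate = blinks_per_minute(eye_openness, fps)
    return rate < low or rate > high


# One minute of made-up footage at 30 frames per second with only 3 blinks.
frames = [0.3] * 1800
for start in (100, 700, 1300):
    frames[start:start + 3] = [0.1] * 3

print(blinks_per_minute(frames, fps=30), blink_rate_looks_odd(frames, fps=30))
```

A flag here only means "look closer." Plenty of real people blink rarely on camera, and newer fakes handle blinking much better than early ones did.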

Audio and Movement Checks

Listen closely: does the voice sound robotic or out of sync? Background noise might not fit, or words could slur unnaturally. Watch movements too. Real videos have fluid turns, but deepfakes can look stiff, like the head jerks oddly. One trick I’ve used is a reverse image search: grab a screenshot of a key frame and run it through a tool like Google Images to see whether the clip was altered from an older, original video.
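
If screenshotting by hand gets tedious, stills can be pulled automatically. This is a minimal sketch that assumes the free `ffmpeg` command-line tool is installed; the file and folder names are placeholders, and the helper functions are my own, not part of any verification product.

```python
import shutil
import subprocess
from pathlib import Path


def build_frame_grab_command(video_path, out_dir, every_seconds=5):
    """Build an ffmpeg command that saves one still every few seconds."""
    if every_seconds <= 0:
        raise ValueError("every_seconds must be positive")
    pattern = str(Path(out_dir) / "frame_%04d.png")
    # The fps filter set to 1/N keeps one frame per N seconds of video.
    return ["ffmpeg", "-i", str(video_path), "-vf", f"fps=1/{every_seconds}", pattern]


def grab_frames(video_path, out_dir, every_seconds=5):
    """Run ffmpeg and return the saved stills, ready to upload to a reverse image search."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_frame_grab_command(video_path, out_dir, every_seconds), check=True)
    return sorted(Path(out_dir).glob("frame_*.png"))


if __name__ == "__main__":
    # Placeholder names: swap in the clip you actually want to check.
    print(" ".join(build_frame_grab_command("suspicious_clip.mp4", "frames")))
```

Pick the stills where the face is clearest and search those. A match with an older clip showing different words or a different setting is a strong hint something was altered.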

Tools and Resources for Detection

You’re not alone in this: plenty of free tools help verify videos. Tools like InVID Verification let you analyze clips frame by frame. Microsoft’s Video Authenticator, announced in 2020 and shared through partner organizations rather than as a public download, scores how likely something is a deepfake. For quick checks, some platforms scan uploads for AI fingerprints, those tiny pixel patterns left by generators.

Want a hands-on demo? CBC News has a YouTube video that walks through real election-related deepfakes and quizzes you on spotting them. It’s super eye-opening.

And for more on staying safe online, head over to our piece on [digital privacy tips](/digital-privacy-guide).

Conclusion

Wrapping this up, deepfakes are a growing problem, especially as we gear up for 2026. But arming yourself with these tricks for spotting fake videos means you’re less likely to get fooled. Stay curious, question everything, and share what you learn, because it helps everyone. The tech’s here to stay, but so is our ability to outsmart it.

Key Takeaways

- Deepfakes often fail at natural details like blinking, shadows, and skin texture—train your eye on those.

- Elections amplify risks, with states rushing laws but enforcement lagging; verify before sharing.

- Use tools like reverse searches and authenticators to double-check suspicious videos.

- Bias makes us vulnerable; seek diverse sources to avoid echo chambers.

- Audio mismatches and jerky movements are common slip-ups in fakes.

FAQ

Q: What’s the easiest first step to spot a fake video?

A: Just pause and zoom in on the face—look for weird skin or eye stuff that doesn’t add up. It’s saved me a few times from sharing junk.

Q: Are deepfakes illegal in elections?

A: In many states like California, yeah, especially if they’re used to mislead voters without disclaimers. But it varies, so check your local rules.

Q: Can my phone detect deepfakes?

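A: Not with anything built in. Some third-party apps and browser extensions offer to scan videos you upload, and services like Truepic focus on verifying where a photo or video came from. Handy for quick checks, but treat any result as a hint rather than proof.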

Q: Why do deepfakes seem worse around elections?

A: Timing’s everything. Fakes drop right when they can shift opinions fast and there’s little time to debunk them. With the 2026 midterms, expect more of them targeting swing states.

Q: What if I think I’ve seen a deepfake online?

A: Report it to the platform, fact-check with sites like PolitiFact, and don’t spread it further. Better safe than sorry.

Q: Do all AI videos have watermarks?

A: Some do, such as videos made with Sora, but not all. Look for moving logos or check the file’s metadata if you’re suspicious.
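
For anyone who wants to peek at metadata themselves, here’s a small sketch. It assumes you have the free `ffprobe` tool (it ships with ffmpeg) and have saved its JSON report for a clip; the sample report below is invented for illustration. The script only lists whatever tags are present. It can’t tell you on its own whether a video is AI-made, and metadata is easy to strip or fake.

```python
import json


def collect_tags(ffprobe_json_text):
    """Flatten the container-level and stream-level tags from an ffprobe JSON report."""
    probe = json.loads(ffprobe_json_text)
    tags = {}
    for key, value in probe.get("format", {}).get("tags", {}).items():
        tags[f"format.{key}"] = value
    for index, stream in enumerate(probe.get("streams", [])):
        for key, value in stream.get("tags", {}).items():
            tags[f"stream{index}.{key}"] = value
    return tags


# Invented sample. A real report can be produced with:
#   ffprobe -v quiet -print_format json -show_format -show_streams clip.mp4
sample_report = """
{
  "format": {"tags": {"encoder": "ExampleEncoder 1.0"}},
  "streams": [{"codec_type": "video", "tags": {"handler_name": "VideoHandler"}}]
}
"""

for name, value in collect_tags(sample_report).items():
    print(f"{name}: {value}")
```

An unfamiliar encoder name or a missing creation date isn’t proof of anything, but it’s one more detail to weigh alongside the visual and audio checks.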